Artificial Intelligence (AI) algorithms have revolutionized how we live, work, and solve problems. They power everything from voice assistants and self-driving cars to personalized marketing and medical diagnostics.

But what lies beneath the surface of these technologies?

How do these algorithms learn, evolve, and make decisions with such precision?

In this article, we break down the mechanisms these algorithms use to learn from data, how they make decisions, and the ethical questions they raise. Whether you’re a tech enthusiast or simply curious about the inner workings of AI, this guide aims to demystify these systems and give you practical knowledge.

Contents:

- Understanding the Basics of AI Learning

- The Role of Data in AI Training

- How AI Makes Decisions

- Supervised vs. Unsupervised Learning

- Real-World Applications of AI Algorithms

- Challenges in AI Decision-Making

- The Future of AI Algorithm Development

- Key Takeaways for Businesses

- Conclusion

1. Understanding the Basics of AI Learning

At its core, Artificial Intelligence (AI) learning refers to the process by which machines acquire knowledge or skills from data and experience. Unlike traditional programming, where developers provide explicit instructions, AI systems learn by identifying patterns in datasets.

What sets AI apart is its ability to improve over time.

Given more relevant, high-quality data, these algorithms generally make better predictions. This approach is known as "machine learning", a subset of AI that drives many modern technologies.

Think of it as teaching a child to recognize objects in pictures. The more examples they see, the better they get at identifying them.

Neural networks play a key role in AI learning.

These structures are loosely inspired by the human brain, with interconnected layers of "neurons" that process information and make predictions. For example, a neural network trained on handwritten digits can often identify numbers correctly even when they’re poorly written.
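To make "learning from examples" concrete, here is a minimal sketch of a single artificial neuron trained with gradient descent. The toy dataset (the logical OR function), the learning rate, and the function names are assumptions chosen for illustration; real networks stack many such neurons in layers.

```python
import math

def sigmoid(z):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def train_neuron(examples, epochs=2000, learning_rate=0.5):
    """Fit one neuron (two weights and a bias) with gradient descent."""
    w1, w2, bias = 0.0, 0.0, 0.0
    for _ in range(epochs):
        for (x1, x2), target in examples:
            prediction = sigmoid(w1 * x1 + w2 * x2 + bias)
            # Gradient of the log loss with respect to the weighted sum.
            error = prediction - target
            w1 -= learning_rate * error * x1
            w2 -= learning_rate * error * x2
            bias -= learning_rate * error
    return w1, w2, bias

def predict(weights, x1, x2):
    """Return the neuron's output (between 0 and 1) for one input pair."""
    w1, w2, bias = weights
    return sigmoid(w1 * x1 + w2 * x2 + bias)

# Toy labeled data (an assumption for illustration): the logical OR function.
OR_EXAMPLES = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 1)]

weights = train_neuron(OR_EXAMPLES)
```

After training, `predict(weights, 0, 0)` falls below 0.5 and the other three inputs land above it. The neuron was never given the rule; it adjusted its weights until its outputs matched the examples.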

To make this clearer, let’s take a real-world example:

When Netflix recommends movies based on your viewing history, it’s using AI algorithms that have learned from billions of data points to predict your preferences.

This ability to learn and adapt has made AI indispensable in industries like healthcare, finance, and entertainment.

2. The Role of Data in AI Training

Data is the lifeblood of AI.

Without it, algorithms would be like untrained athletes: full of potential but lacking the experience to perform. AI training involves feeding large datasets into algorithms to help them understand patterns, relationships, and anomalies.

Structured data (like spreadsheets) and unstructured data (like text or images) both play critical roles in this process. For instance, training an AI model to detect spam emails requires labeled data—emails marked as "spam" or "not spam." This labeled data helps the algorithm learn what features distinguish spam emails from legitimate ones.
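As a rough illustration of how labeled emails turn into a working filter, the sketch below trains a tiny naive Bayes classifier on four made-up messages. Naive Bayes is only one possible technique, and the training emails and function names are hypothetical.

```python
import math
from collections import Counter

def train_spam_filter(labeled_emails):
    """Count how often each word appears in spam and in legitimate mail."""
    word_counts = {"spam": Counter(), "not spam": Counter()}
    email_counts = Counter()
    for text, label in labeled_emails:
        email_counts[label] += 1
        word_counts[label].update(text.lower().split())
    return word_counts, email_counts

def spam_probability(model, text):
    """Estimate P(spam | text) with naive Bayes and add-one smoothing."""
    word_counts, email_counts = model
    vocabulary = set(word_counts["spam"]) | set(word_counts["not spam"])
    log_scores = {}
    for label in ("spam", "not spam"):
        total_words = sum(word_counts[label].values())
        score = math.log(email_counts[label] / sum(email_counts.values()))
        for word in text.lower().split():
            smoothed = (word_counts[label][word] + 1) / (total_words + len(vocabulary))
            score += math.log(smoothed)
        log_scores[label] = score
    # Convert the two log scores back into a normalized probability.
    highest = max(log_scores.values())
    exp_scores = {label: math.exp(s - highest) for label, s in log_scores.items()}
    return exp_scores["spam"] / sum(exp_scores.values())

# Hypothetical labeled training data.
TRAINING_EMAILS = [
    ("win a free prize now", "spam"),
    ("free money claim now", "spam"),
    ("meeting agenda for monday", "not spam"),
    ("lunch on monday", "not spam"),
]
model = train_spam_filter(TRAINING_EMAILS)
```

With this toy data, `spam_probability(model, "free prize")` comes out above 0.5 and `spam_probability(model, "monday meeting")` below it, because the labels told the model which words tend to appear in each kind of email.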

But not all data is created equal.

High-quality data ensures that AI models make accurate predictions. On the flip side, biased or incomplete datasets can lead to unreliable or unfair results.

Consider a hiring algorithm trained on historical data where certain demographics were underrepresented. The model might inadvertently favor certain groups over others.

Here’s a simplified breakdown of the data training process:

- Data Collection: Gathering relevant information from diverse sources.

- Data Cleaning: Removing errors, duplicates, and inconsistencies.

- Data Labeling: Categorizing information for supervised learning tasks.

- Data Augmentation: Expanding datasets with synthetic or manipulated samples.

Each of these steps is crucial in building AI systems that are accurate, ethical, and reliable.
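The four steps above can be sketched in a few lines of Python. This is a simplified illustration, not a production pipeline: the raw records, the keyword-based labeling rule (a stand-in for human annotators), and the word-shuffling augmentation are all assumptions.

```python
import random

def clean(records):
    """Drop missing or empty records and remove duplicates, keeping order."""
    seen = set()
    cleaned = []
    for text in records:
        if text is None:
            continue
        # Normalize whitespace and case so near-identical copies match.
        normalized = " ".join(text.split()).lower()
        if normalized and normalized not in seen:
            seen.add(normalized)
            cleaned.append(normalized)
    return cleaned

def label(records, spam_keywords=("free", "prize")):
    """Hypothetical labeling rule standing in for human annotators."""
    labeled = []
    for text in records:
        is_spam = any(keyword in text.split() for keyword in spam_keywords)
        labeled.append((text, "spam" if is_spam else "not spam"))
    return labeled

def augment(labeled, rng):
    """Add one shuffled-word copy of each sample, keeping its label."""
    augmented = list(labeled)
    for text, tag in labeled:
        words = text.split()
        rng.shuffle(words)
        augmented.append((" ".join(words), tag))
    return augmented

# Data collection: hypothetical raw records gathered from some source.
RAW = ["Win a FREE prize", "win a free   prize", None, "", "Team lunch on Friday"]

cleaned = clean(RAW)
labeled = label(cleaned)
dataset = augment(labeled, random.Random(0))
```

Five raw records shrink to two clean ones, each receives a label, and augmentation doubles the labeled set to four samples.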

3. How AI Makes Decisions

Once trained, AI algorithms transition from learning to decision-making. But how do they translate knowledge into action?

The process involves several key steps:

Data Input

AI systems take in real-world inputs, such as images, sounds, or text. For example, an AI-powered chatbot processes a user’s query.

Pattern Recognition

The algorithm identifies patterns in the input, comparing them to its training data. A medical AI might recognize abnormalities in X-rays that match signs of disease.

Probabilistic Predictions

Using probabilities, the algorithm predicts the most likely outcome. For instance, an email filter assigns a "spam probability" to incoming messages.

Action

Based on its prediction, the system performs an action. This could be as simple as flagging an email or as complex as navigating a car through traffic.
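Here is a minimal sketch of those four steps for the email filter example. The word weights, the bias, and the 0.5 threshold are hypothetical values standing in for what a trained model might have learned.

```python
import math

# Hypothetical weights a trained model might have learned for three words.
LEARNED_WEIGHTS = {"free": 2.0, "prize": 1.5, "meeting": -2.0}
BIAS = -1.0
SPAM_THRESHOLD = 0.5  # Assumed cutoff; real systems tune this value.

def extract_features(message):
    """Data input and pattern recognition: count the words the model knows."""
    words = message.lower().split()
    return {word: words.count(word) for word in LEARNED_WEIGHTS}

def predict_spam_probability(features):
    """Probabilistic prediction: a logistic score between 0 and 1."""
    score = BIAS + sum(LEARNED_WEIGHTS[word] * count for word, count in features.items())
    return 1.0 / (1.0 + math.exp(-score))

def decide(message):
    """Action: flag the message or deliver it, based on the probability."""
    probability = predict_spam_probability(extract_features(message))
    action = "flag as spam" if probability >= SPAM_THRESHOLD else "deliver to inbox"
    return action, probability
```

Calling `decide("Claim your free prize")` returns the action "flag as spam", while `decide("Agenda for the meeting")` returns "deliver to inbox". Moving the threshold trades missed spam against wrongly flagged mail.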

AI decision-making resembles human reasoning in outline, but it can be applied to far more data, far faster, than a person could manage.

This process can be iterative. When a system is retrained or updated on the new scenarios it encounters, its predictions and decisions improve over time.

4. Supervised vs. Unsupervised Learning

Two of the most common ways AI algorithms learn are supervised and unsupervised learning.

Understanding these approaches is key to grasping how AI adapts to varying challenges and datasets.

Supervised learning is akin to learning with a teacher.

The algorithm is provided with labeled data, where each input comes with a corresponding output. For example, when training an AI to recognize cats in images, each image is labeled as "cat" or "not cat." The system learns to associate patterns in the images with these labels. Over time, it becomes proficient at identifying cats in unlabeled images.

Real-world example: Fraud detection systems in banking rely heavily on supervised learning. By analyzing historical data of fraudulent and legitimate transactions, they can identify suspicious activity in real-time.
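To illustrate supervised learning on the fraud example, the sketch below uses a nearest-neighbor classifier: a new transaction gets the label of the most similar labeled transaction. The transactions and feature choices are hypothetical, and production fraud systems use far richer models.

```python
def nearest_neighbor_predict(labeled_examples, new_point):
    """Return the label of the training example closest to new_point."""
    def squared_distance(a, b):
        return sum((x - y) ** 2 for x, y in zip(a, b))

    _, closest_label = min(
        labeled_examples,
        key=lambda example: squared_distance(example[0], new_point),
    )
    return closest_label

# Hypothetical labeled history: (amount in hundreds, time of day as a fraction).
TRANSACTIONS = [
    ((0.2, 0.50), "legitimate"),
    ((0.5, 0.60), "legitimate"),
    ((0.3, 0.75), "legitimate"),
    ((9.0, 0.10), "fraud"),
    ((8.5, 0.15), "fraud"),
]
```

A large early-morning transaction such as `(8.8, 0.12)` is classified as "fraud" because it sits closest to the labeled fraud cases, while `(0.4, 0.55)` is classified as "legitimate".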

In contrast, unsupervised learning is like exploring a new city without a guide.

The algorithm works with unlabeled data, finding hidden patterns and structures. It clusters data points into groups based on their similarities. For instance, an unsupervised algorithm might group customers into segments based on purchasing behavior, even if no prior labels exist.

Real-world example: Recommendation engines, like those on e-commerce platforms, often use unsupervised learning to suggest products based on customer browsing habits.
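The customer segmentation idea can be sketched with k-means, one common clustering technique. The monthly spending figures are made up, and the deterministic choice of starting centers is a simplification for readability.

```python
def k_means_1d(values, k=2, iterations=10):
    """Cluster numbers into k groups (k >= 2) with a basic k-means loop."""
    ordered = sorted(values)
    # Deterministic start: spread the initial centers across the sorted values.
    centers = [ordered[i * (len(ordered) - 1) // (k - 1)] for i in range(k)]
    clusters = []
    for _ in range(iterations):
        clusters = [[] for _ in range(k)]
        for value in values:
            nearest = min(range(k), key=lambda i: abs(value - centers[i]))
            clusters[nearest].append(value)
        # Move each center to the mean of its cluster (keep it if the cluster is empty).
        centers = [
            sum(cluster) / len(cluster) if cluster else centers[i]
            for i, cluster in enumerate(clusters)
        ]
    return centers, clusters

# Hypothetical monthly spend per customer, with no labels attached.
MONTHLY_SPEND = [12, 15, 14, 210, 190, 205, 11]

centers, clusters = k_means_1d(MONTHLY_SPEND, k=2)
```

The algorithm separates the low spenders from the high spenders without ever being told that those two groups exist.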

Both methods have their strengths. Supervised learning excels when labeled examples of the desired output are available, while unsupervised learning shines at uncovering structure that nobody has labeled in advance.

In many cases, AI combines these approaches for hybrid solutions, such as semi-supervised learning. This versatility allows AI to tackle a diverse array of challenges, from image recognition to behavioral analysis.

5. Real-World Applications of AI Algorithms

AI algorithms are no longer confined to research labs; they’ve permeated virtually every industry. Their ability to learn and make decisions at scale has unlocked innovations that were once considered science fiction.

Let’s explore some groundbreaking applications:

- Healthcare: AI assists in diagnosing diseases, predicting patient outcomes, and even developing personalized treatment plans. For instance, IBM’s Watson has been used to analyze medical records and suggest therapies tailored to individual patients.

- Finance: Fraud detection, algorithmic trading, and credit risk assessment are just a few areas where AI excels. Algorithms analyze vast datasets to identify anomalies or predict market trends.

- Retail: AI-driven recommendation engines enhance the shopping experience by suggesting products based on past behavior. Additionally, inventory management systems use AI to forecast demand and optimize stock levels.

- Transportation: Self-driving cars, powered by AI, process real-time data from sensors and cameras to navigate roads safely. Companies like Tesla and Waymo are leading the charge in this domain.

- Entertainment: Platforms like Netflix and Spotify leverage AI to personalize content recommendations, ensuring users discover movies, shows, and music they’ll love.

- Writing: AI tools like ChatGPT and Jasper AI are transforming the content industry by generating blog posts, marketing copy, and even creative pieces. These systems analyze trends and user inputs to craft engaging and optimized content.

- Recruitment: AI is utilized to match job openings with the most suitable candidates. For example, LinkedIn’s AI algorithms analyze candidate profiles, skills, and experience to suggest ideal matches.

Each of these applications underscores the transformative potential of AI. By automating complex tasks and enhancing decision-making, these algorithms are reshaping our world.

6. Challenges in AI Decision-Making

Despite their remarkable capabilities, AI algorithms are not without flaws. Several challenges can arise in their decision-making processes, often with significant implications.

Bias in data

One of the most pressing issues is bias in training datasets. AI models trained on biased data may produce discriminatory outcomes. For example, an algorithm designed to screen job applications might unintentionally favor certain demographics if historical hiring data is biased.
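One simple way to surface this kind of bias is to compare how often each group receives a positive decision. The sketch below does that for a hypothetical screening log; the groups, outcomes, and the idea of using the rate gap as a warning sign are illustrative assumptions, not a complete fairness audit.

```python
from collections import defaultdict

def selection_rates(decisions):
    """Return the share of positive decisions for each group."""
    totals = defaultdict(int)
    selected = defaultdict(int)
    for group, was_selected in decisions:
        totals[group] += 1
        selected[group] += int(was_selected)
    return {group: selected[group] / totals[group] for group in totals}

# Hypothetical screening outcomes: (group, advanced to interview?).
DECISIONS = [
    ("A", True), ("A", True), ("A", True), ("A", False),
    ("B", True), ("B", False), ("B", False), ("B", False),
]

rates = selection_rates(DECISIONS)
gap = max(rates.values()) - min(rates.values())
```

Here group A advances 75% of the time and group B only 25%, a gap of 0.5 that would warrant a closer look at the training data and the model.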

Lack of transparency

AI decision-making is often described as a "black box". Even when a system produces accurate results, its inner workings can remain opaque. This lack of transparency can make it difficult to trust or validate its decisions.

Overfitting

AI models can sometimes "overfit" to their training data, performing well on familiar datasets but struggling with new, unseen inputs. This limits their real-world applicability.
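The sketch below shows overfitting in its most extreme form: a "model" that simply memorizes its training data, compared with a simpler rule that generalizes. Both models and the toy data are hypothetical, chosen to make the contrast obvious.

```python
def fit_memorizer(training_pairs):
    """An extreme overfitter: store every training input with its label."""
    table = dict(training_pairs)
    return lambda x: table.get(x, "unknown")

def fit_threshold(training_pairs):
    """A simpler model: one cutoff halfway between the two class averages."""
    small = [x for x, y in training_pairs if y == "small"]
    large = [x for x, y in training_pairs if y == "large"]
    cutoff = (sum(small) / len(small) + sum(large) / len(large)) / 2
    return lambda x: "large" if x > cutoff else "small"

def accuracy(model, pairs):
    """Fraction of pairs for which the model predicts the right label."""
    return sum(model(x) == y for x, y in pairs) / len(pairs)

# Toy data: numbers labeled "small" or "large"; TEST holds unseen inputs.
TRAIN = [(1, "small"), (2, "small"), (3, "small"), (8, "large"), (9, "large"), (10, "large")]
TEST = [(0, "small"), (4, "small"), (7, "large"), (11, "large")]

memorizer = fit_memorizer(TRAIN)
threshold = fit_threshold(TRAIN)
```

Both models score perfectly on `TRAIN`, but on `TEST` the memorizer gets nothing right while the threshold rule still classifies every input correctly. This is why models are evaluated on data they have not seen.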

To address these challenges, researchers and developers must adopt best practices, such as:

- Ensuring datasets are diverse and representative.

- Implementing explainable AI (XAI) techniques to improve transparency.

- Regularly testing models on fresh data to ensure robustness.

While these steps mitigate risks, the path to flawless AI decision-making is ongoing and requires constant vigilance.

7. The Future of AI Algorithm Development

As artificial intelligence continues to evolve, so do the algorithms that power it. The future of AI lies in more sophisticated, adaptive systems capable of tackling even greater challenges.

Here’s a glimpse into what lies ahead:

Federated learning: This innovative approach enables AI to learn across decentralized devices while keeping data secure and private. For instance, smartphones can collaboratively improve a model without sharing sensitive user data. Federated learning has profound implications for privacy-preserving AI development.
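A minimal sketch of the idea, assuming a one-parameter model and a simple weighted-averaging scheme: each device trains on its own data and sends back only the updated weight, never the data itself. The device datasets, learning rate, and function names are hypothetical.

```python
def local_update(weight, local_data, learning_rate=0.05, steps=20):
    """Run a few gradient steps for y = weight * x on one device's private data."""
    for _ in range(steps):
        gradient = sum(2 * (weight * x - y) * x for x, y in local_data) / len(local_data)
        weight -= learning_rate * gradient
    return weight

def federated_round(global_weight, devices):
    """Each device trains locally; only the resulting weights are averaged."""
    local_weights = [local_update(global_weight, data) for data in devices]
    sizes = [len(data) for data in devices]
    # Weight each device's contribution by how much data it holds.
    return sum(w * n for w, n in zip(local_weights, sizes)) / sum(sizes)

# Hypothetical private datasets, all roughly following y = 3x.
DEVICES = [
    [(1.0, 3.1), (2.0, 5.9)],
    [(1.5, 4.4), (0.5, 1.6), (2.5, 7.6)],
    [(3.0, 9.0)],
]

weight = 0.0
for _ in range(10):
    weight = federated_round(weight, DEVICES)
```

After ten rounds the shared weight settles close to 3, even though the server in this sketch only ever sees model weights, not the underlying data points.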

Explainable AI (XAI): Transparency is a growing concern in AI decision-making. Future algorithms will integrate explainability at their core, ensuring stakeholders understand how and why decisions are made. Industries like healthcare and law enforcement are particularly keen on XAI to build trust and accountability.

Ethical considerations: As AI systems become more integrated into society, addressing ethical questions will take center stage. Developers must ensure that algorithms align with human values, avoiding harm and promoting fairness.

The future of AI algorithms hinges on a delicate balance between innovation, privacy, and ethics. How this balance is struck will shape the role of AI in our lives.

From advanced neural networks to quantum computing, the possibilities are limitless. However, with great power comes great responsibility. Stakeholders must navigate this landscape carefully, ensuring AI serves humanity's best interests.

8. Key Takeaways for Businesses

For businesses looking to harness the power of AI, understanding how these algorithms function is crucial.

- Identify your objectives

Before adopting AI, pinpoint the specific problems you aim to solve. Whether it's automating tasks or gaining customer insights, clarity is key.

- Choose the right tools

Not all AI solutions are created equal. Evaluate platforms and tools that align with your business needs. For example, TensorFlow and PyTorch are popular for developing custom AI models.

- Focus on data

The quality of your AI’s output depends on the quality of your data. Invest in data cleaning, labeling, and management to maximize algorithm performance.

- Start small

Begin with pilot projects to test AI solutions. Gradually scale up as you gain confidence and see tangible results.

By taking these steps, businesses can position themselves to thrive in an increasingly AI-driven world.

Conclusion

Understanding AI algorithms is both fascinating and essential.

These systems, which learn and make decisions through complex processes, are revolutionizing industries and reshaping our daily lives. However, with this progress comes the responsibility to ensure ethical, transparent, and fair applications.

As we move forward, it’s clear that AI's potential is vast. From transforming healthcare to driving autonomous vehicles, these algorithms already have a substantial impact. Yet, realizing this potential requires a collective effort to address challenges and embrace innovation responsibly.

For individuals and businesses alike, the key lies in staying informed. By understanding how AI works and its real-world applications, we can better navigate this transformative era and unlock the opportunities it offers.

I'd love to read your thoughts!

Access Request Form

Access Request Form

Afterparty RSVP Form

Afterparty RSVP Form

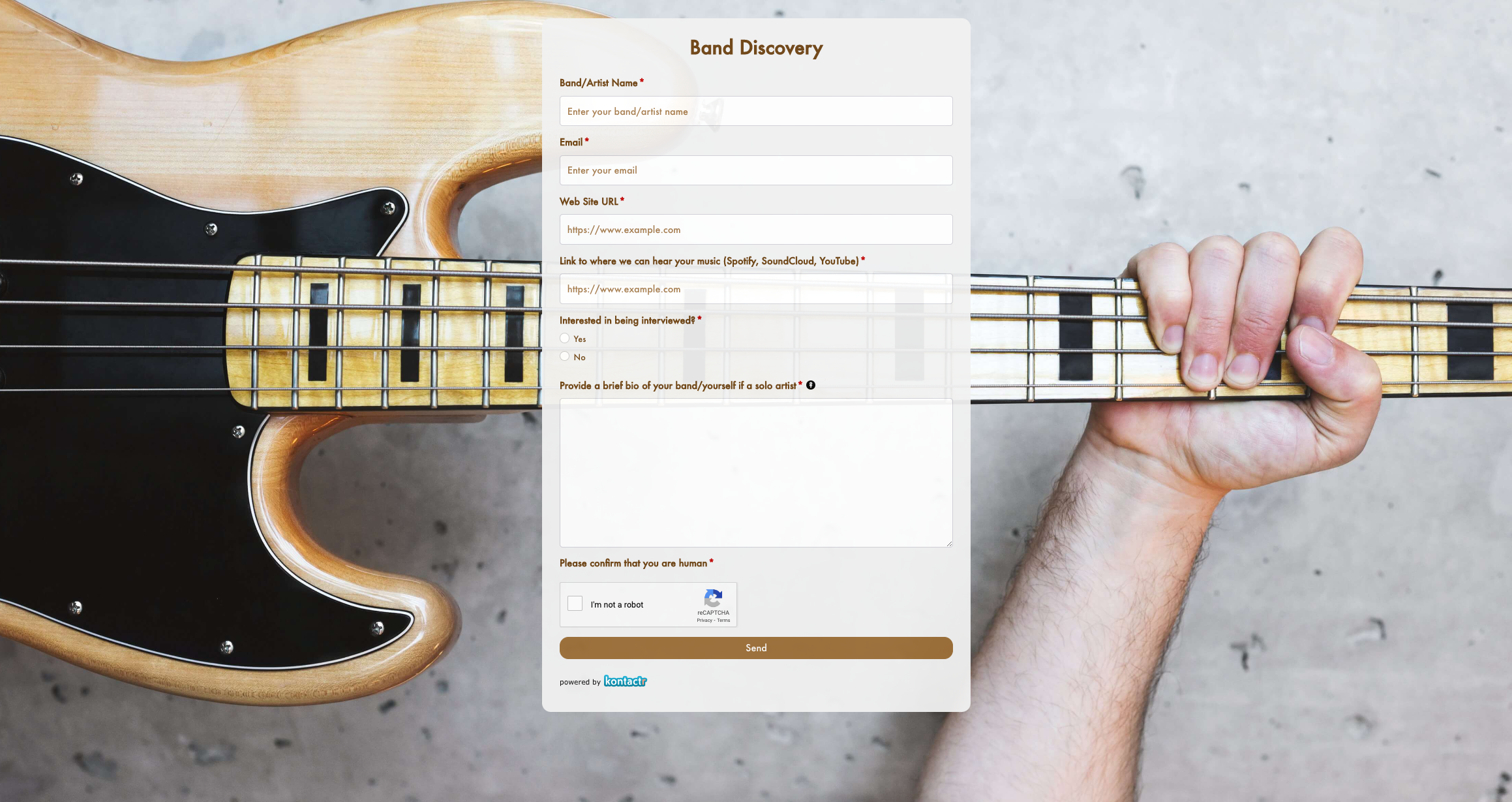

Band Discovery Form

Band Discovery Form

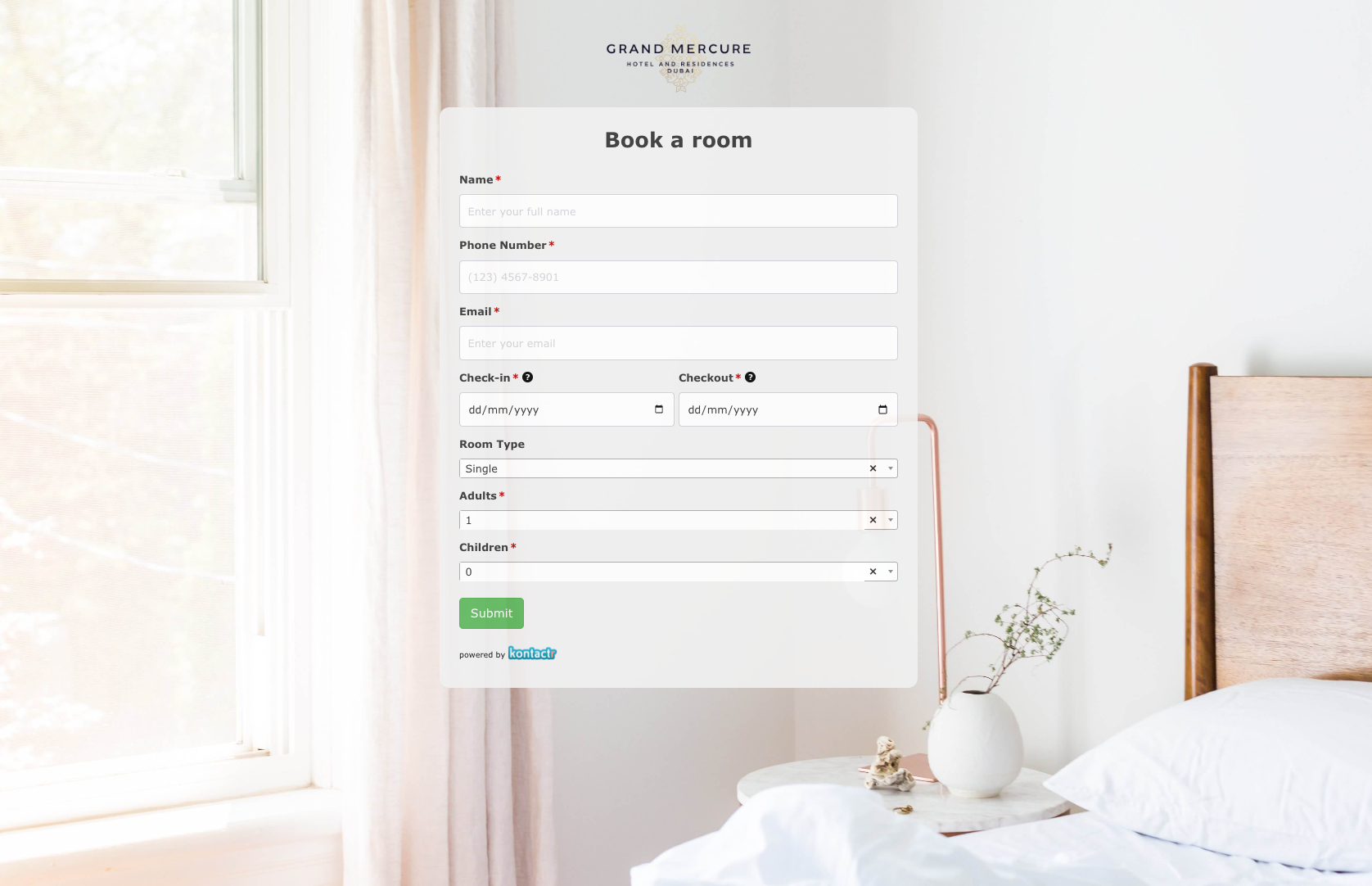

Book a room Form

Book a room Form

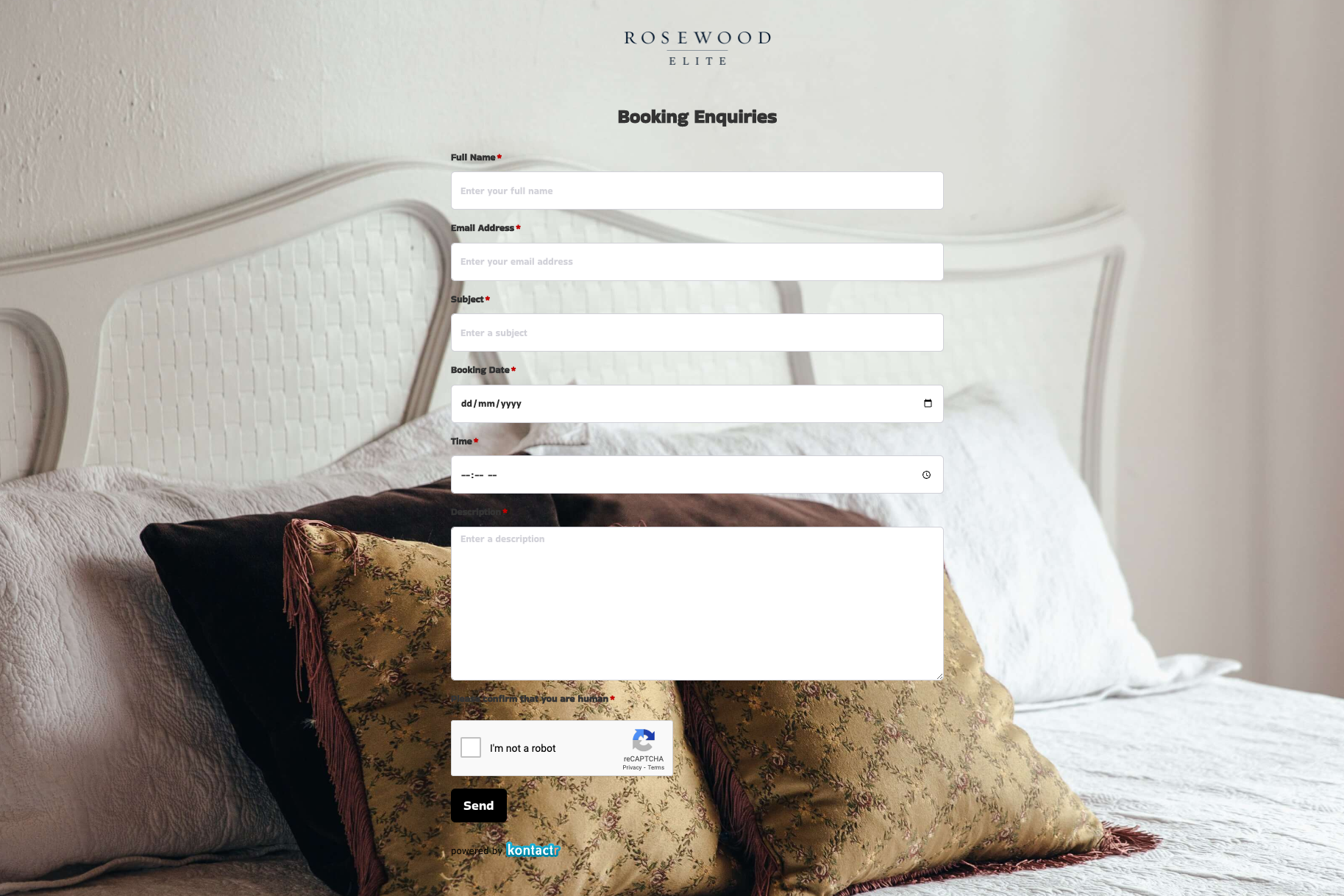

Booking Enquiries Form

Booking Enquiries Form

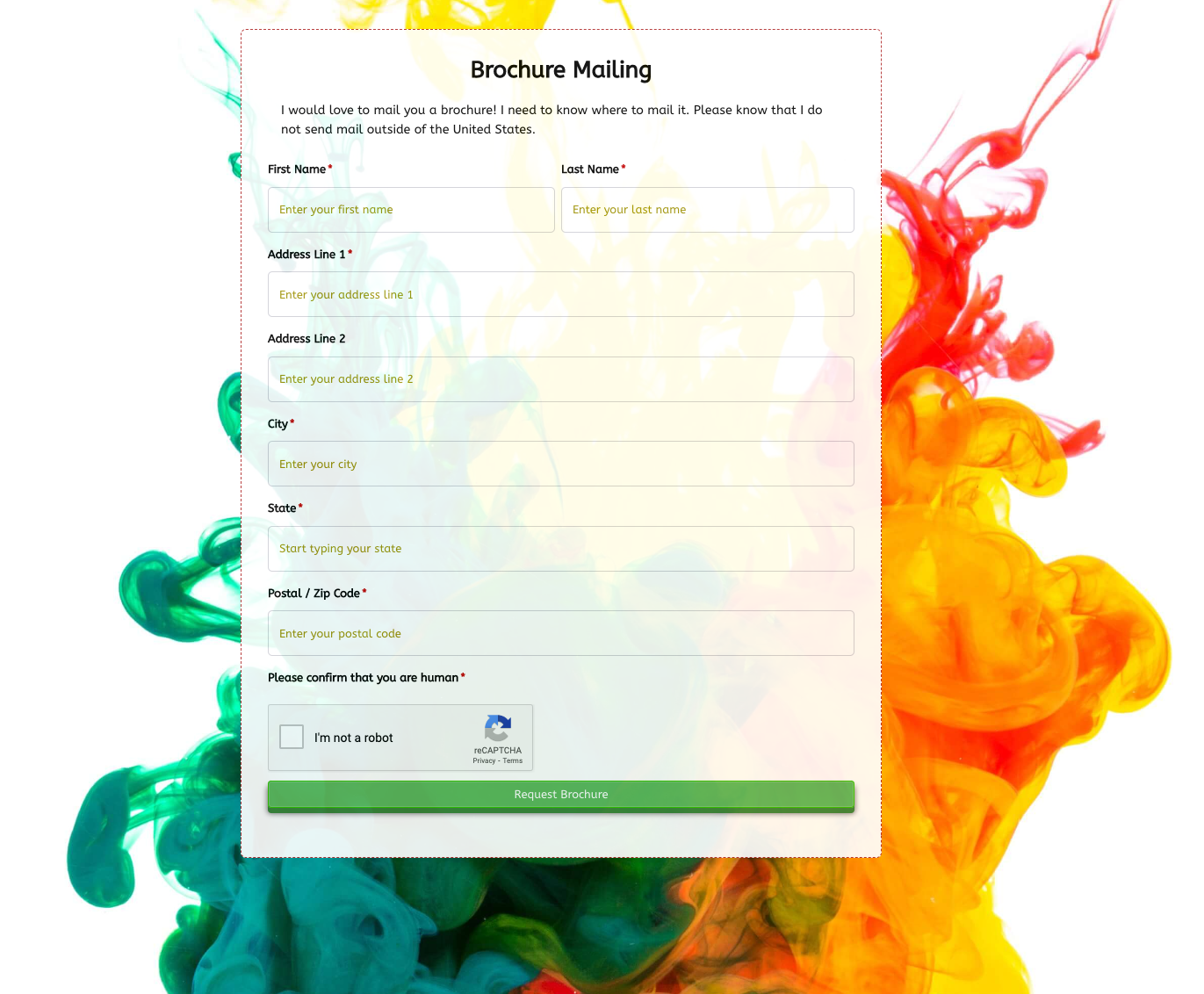

Brochure Mailing Form

Brochure Mailing Form

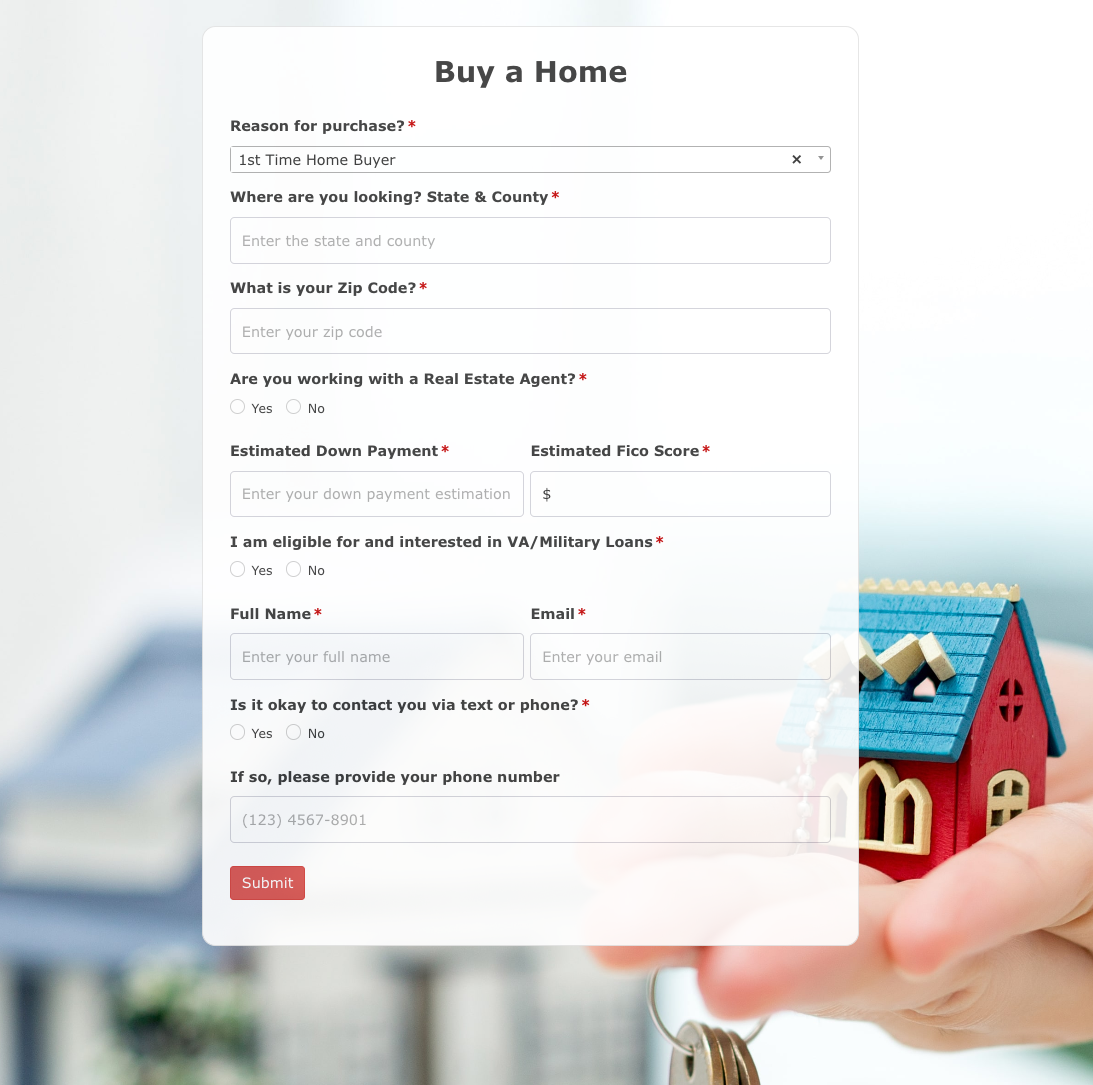

Buy a Home Form

Buy a Home Form

Catalog Request Form

Catalog Request Form

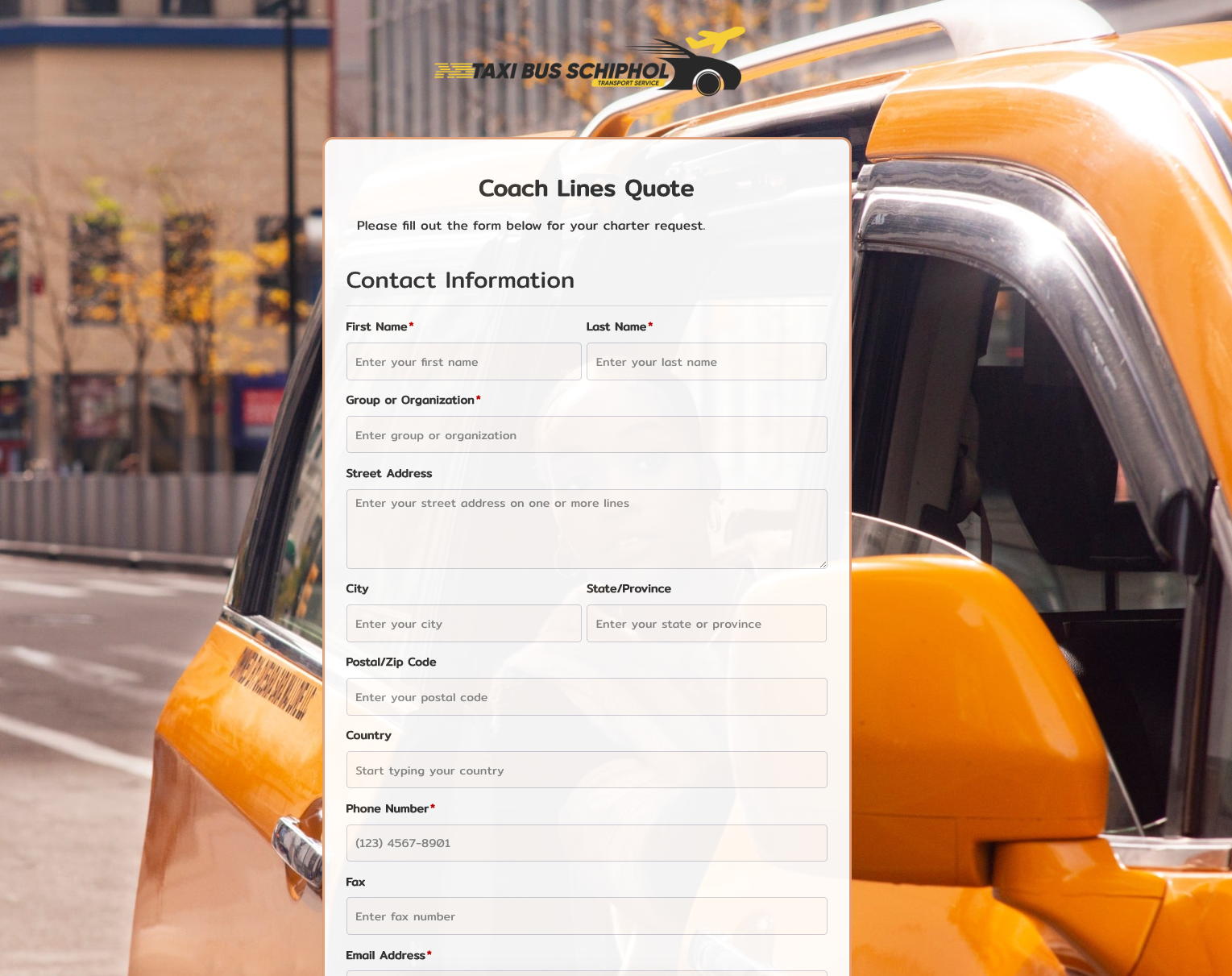

Coach Lines Quote Form

Coach Lines Quote Form

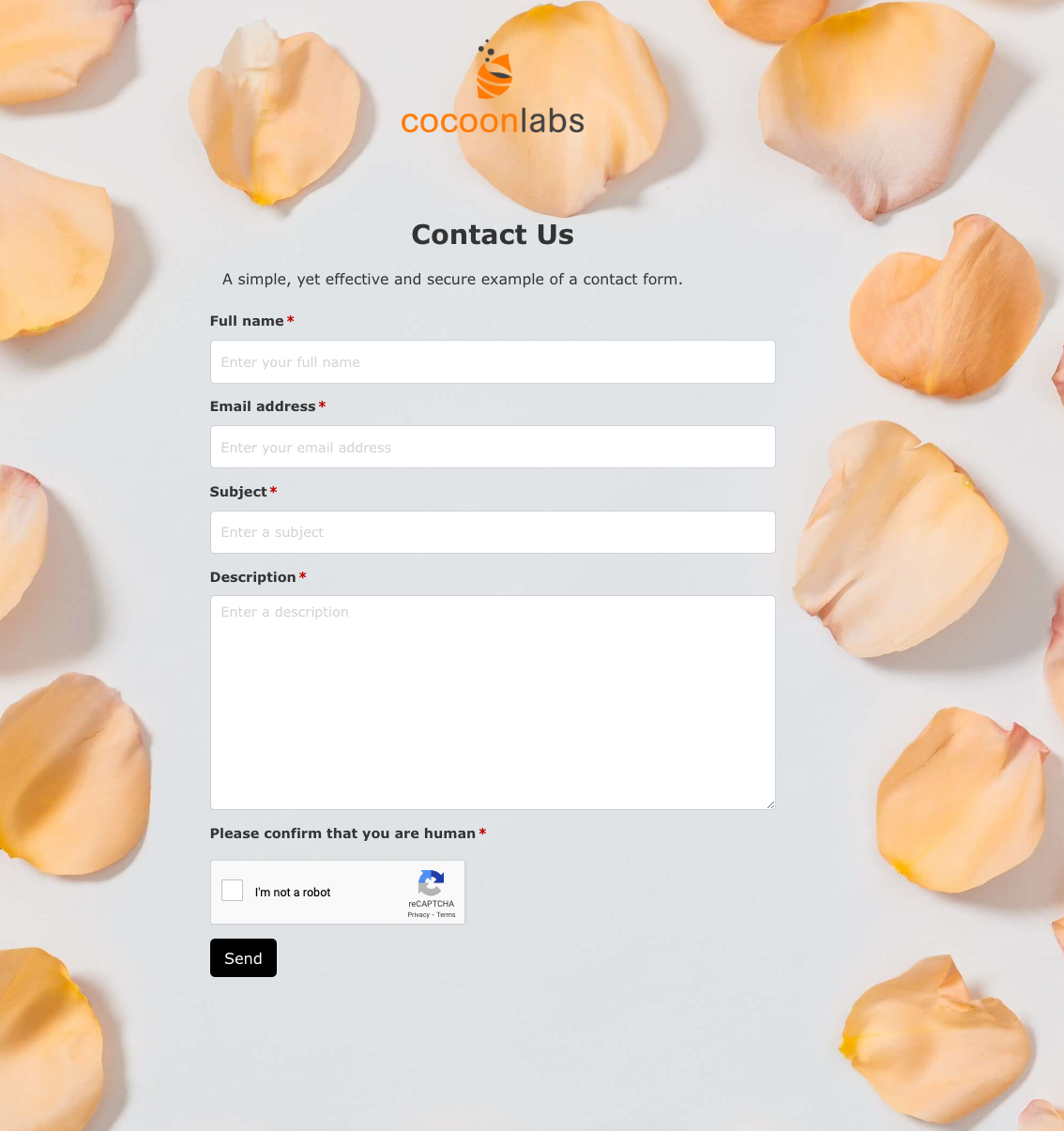

Contact Us Form

Contact Us Form

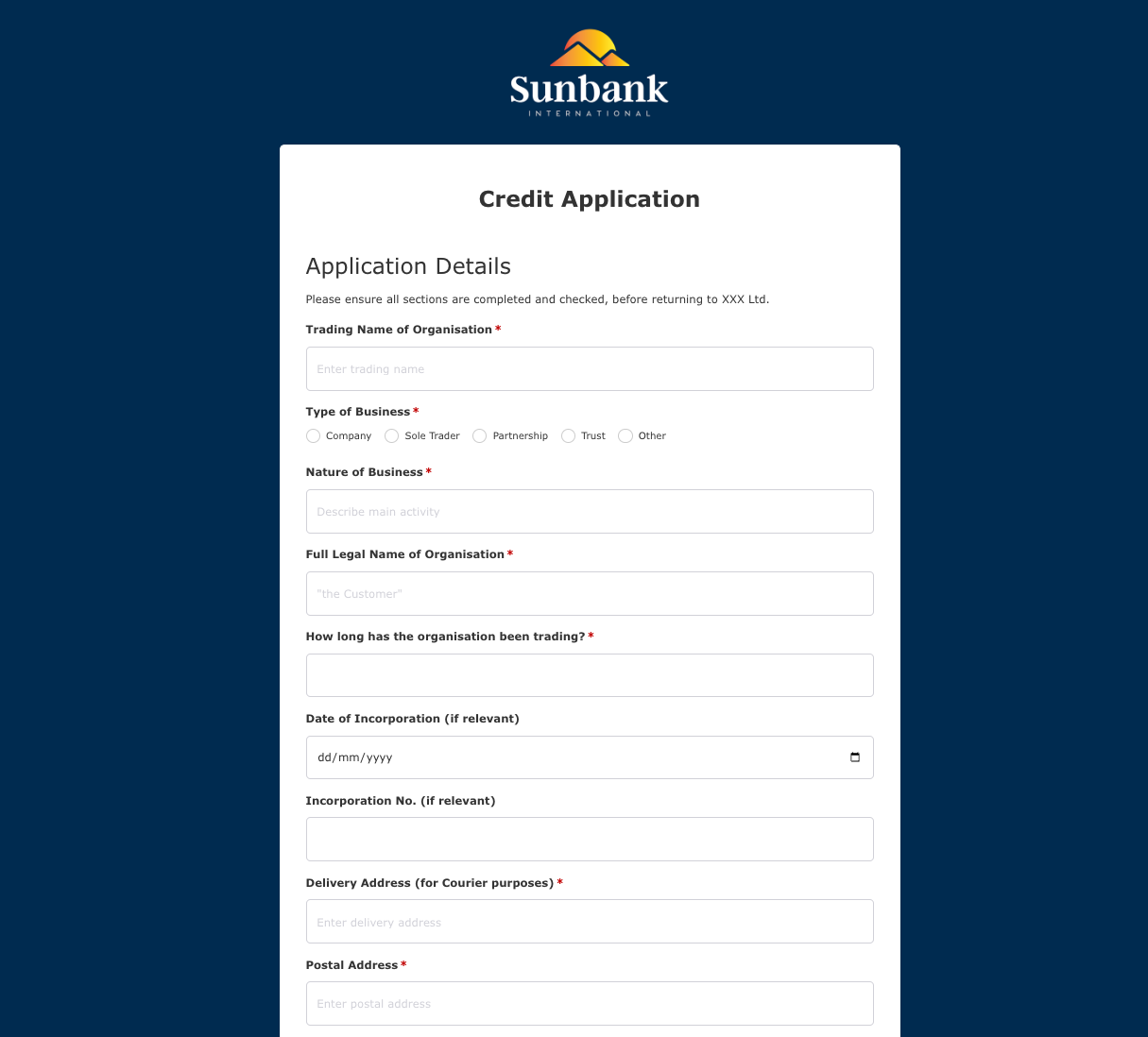

Credit Application Form

Credit Application Form

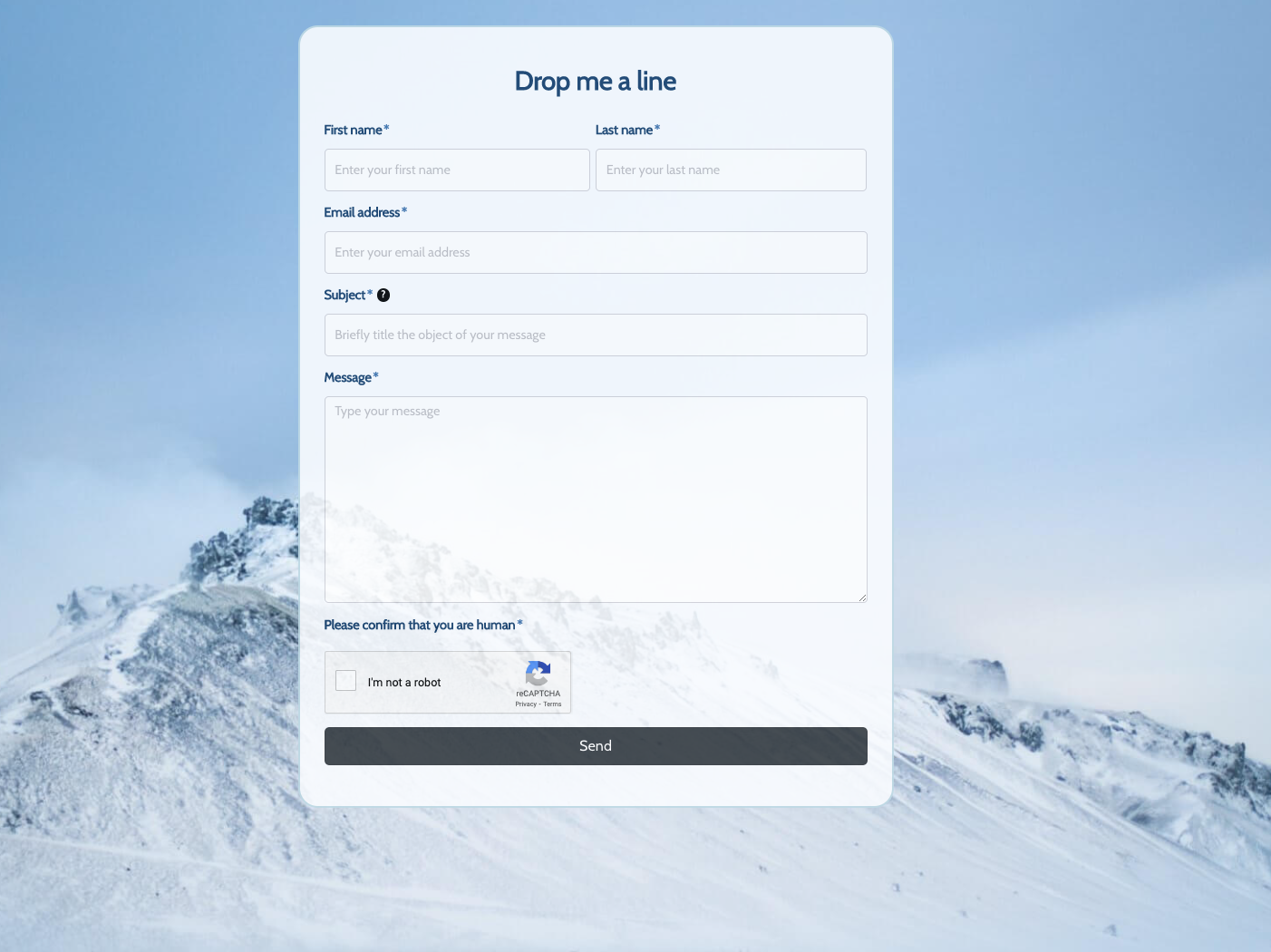

Drop me a line Form

Drop me a line Form

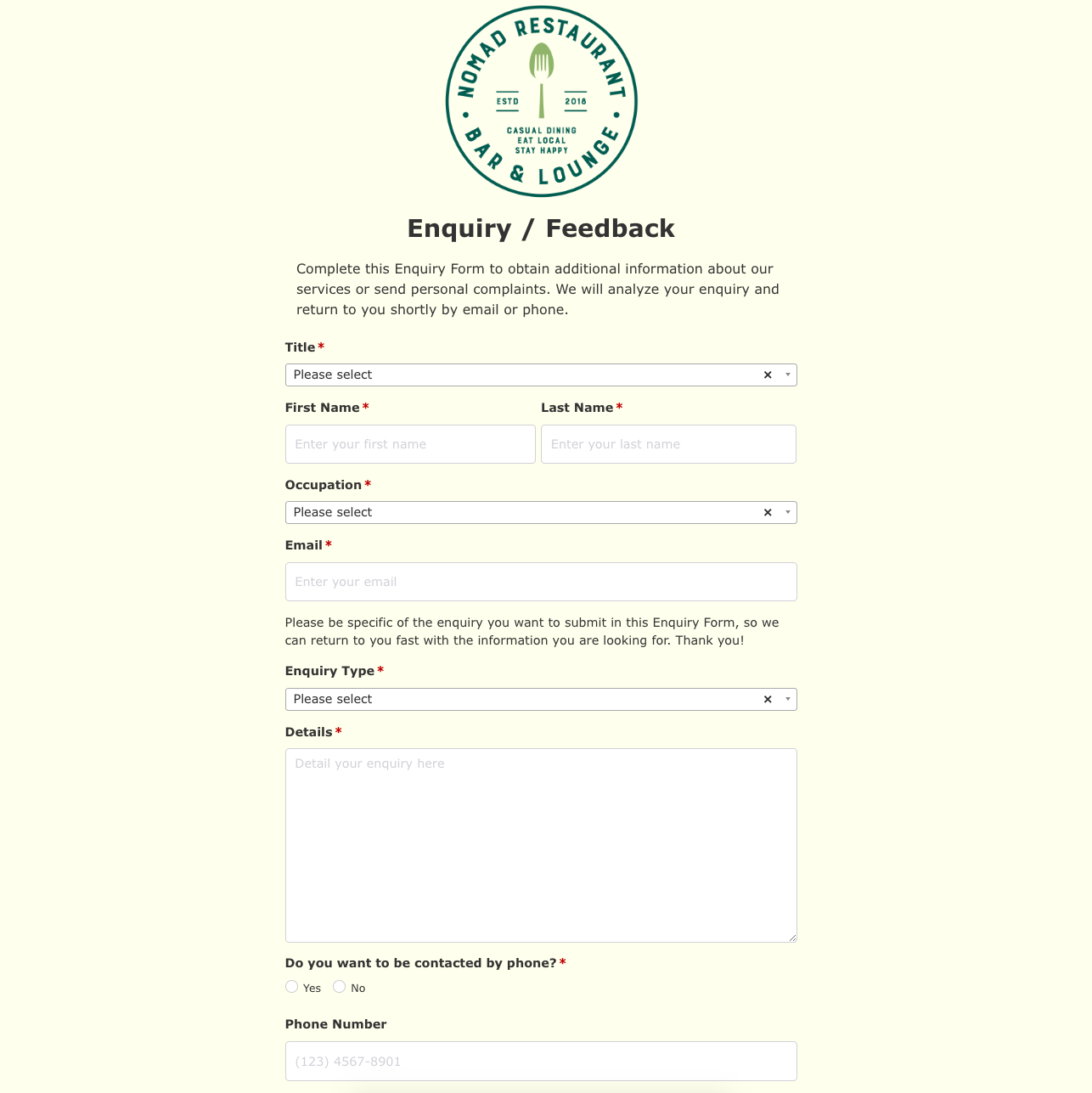

Enquiry / Feedback Form

Enquiry / Feedback Form

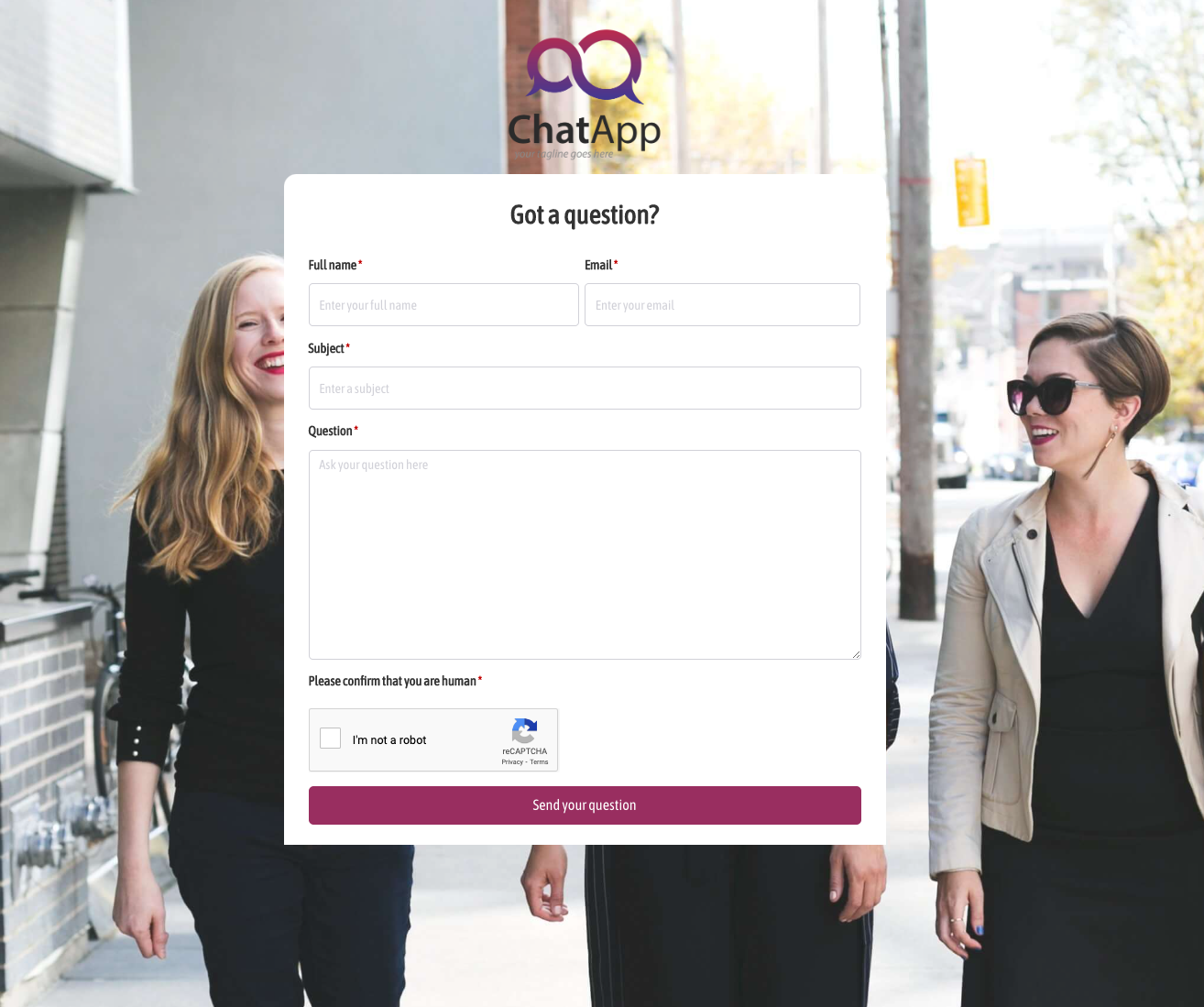

Got a question? Form

Got a question? Form

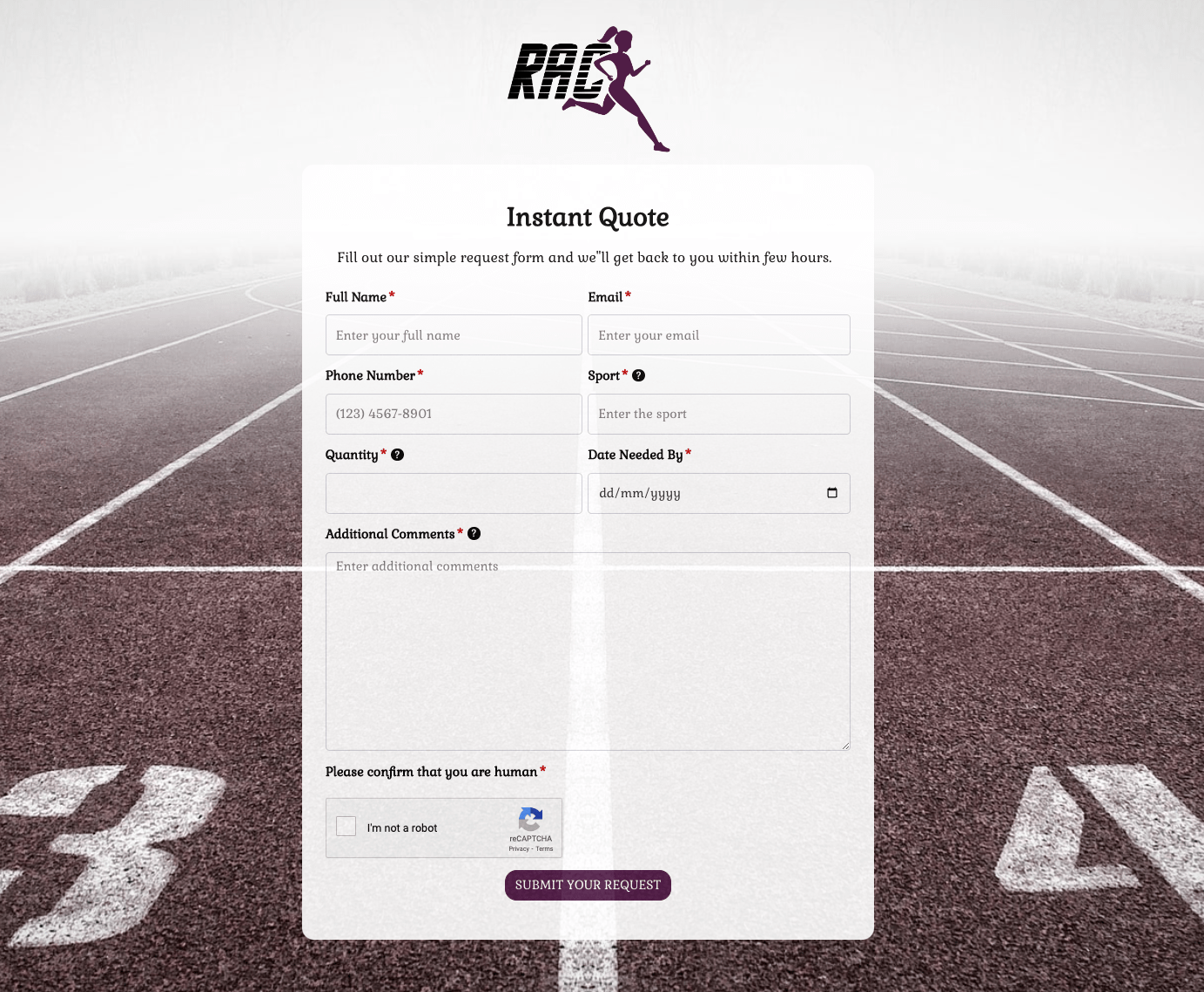

Instant Quote Form

Instant Quote Form

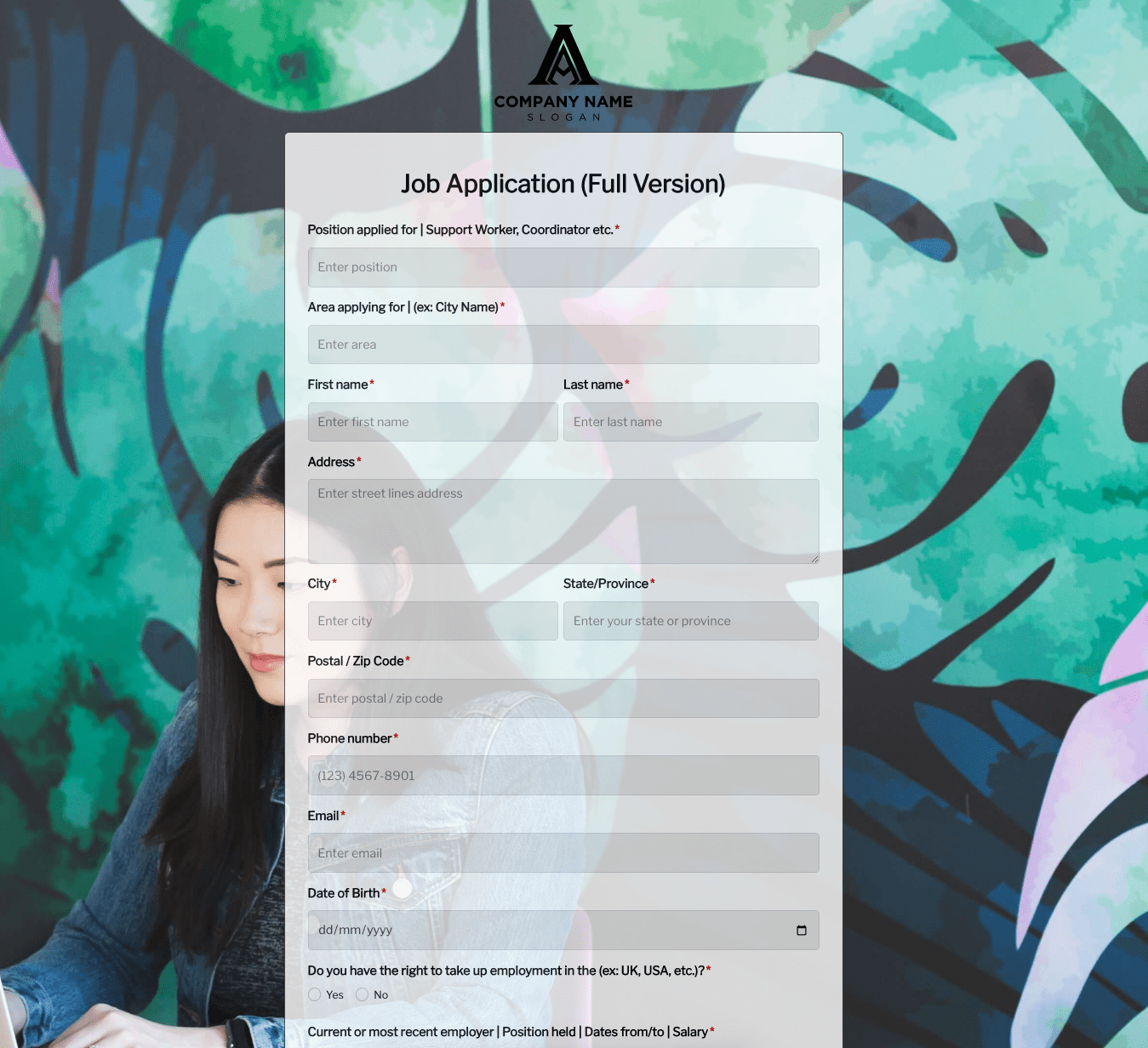

Job Application Form

Job Application Form

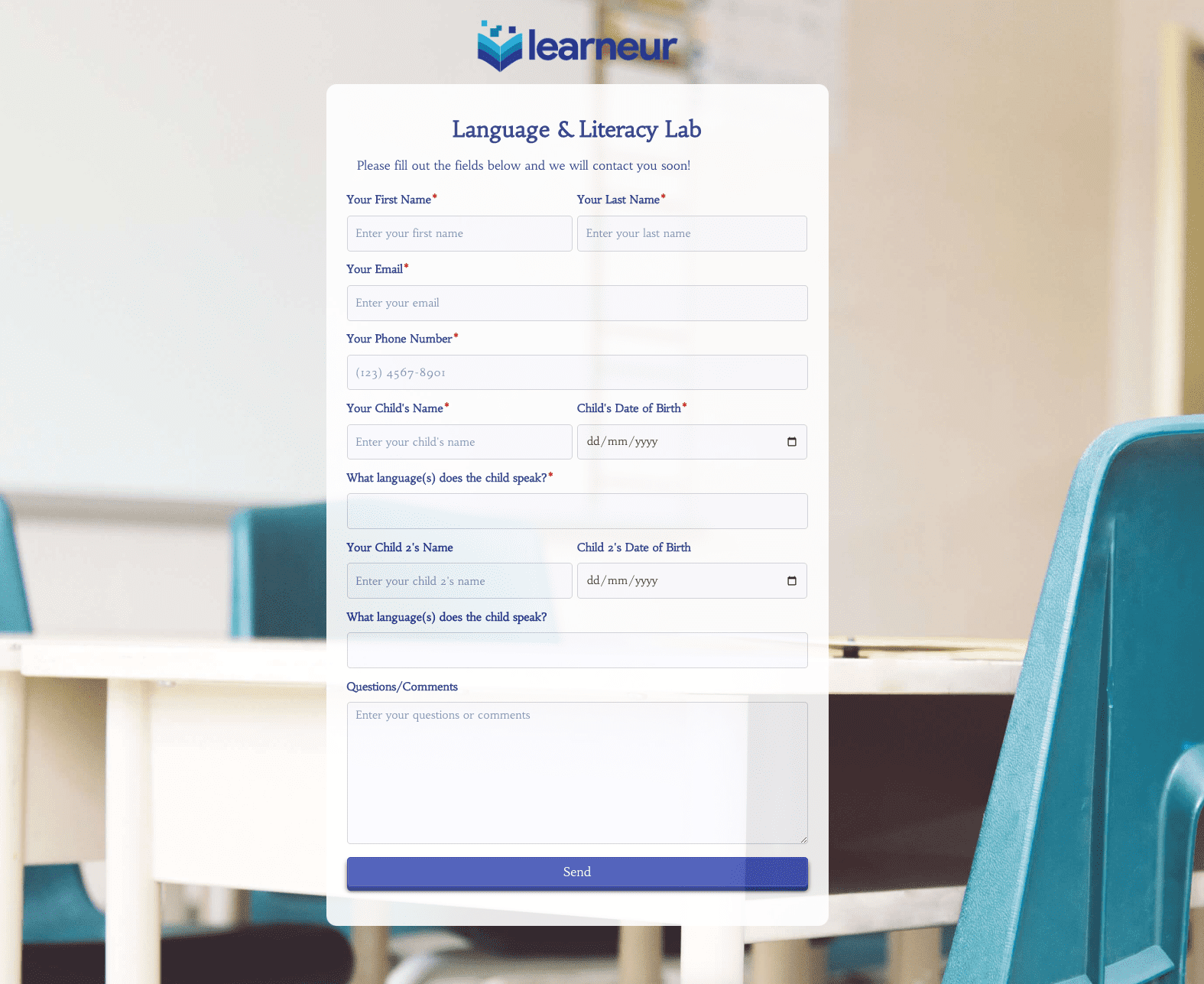

Language & Literacy Lab Form

Language & Literacy Lab Form

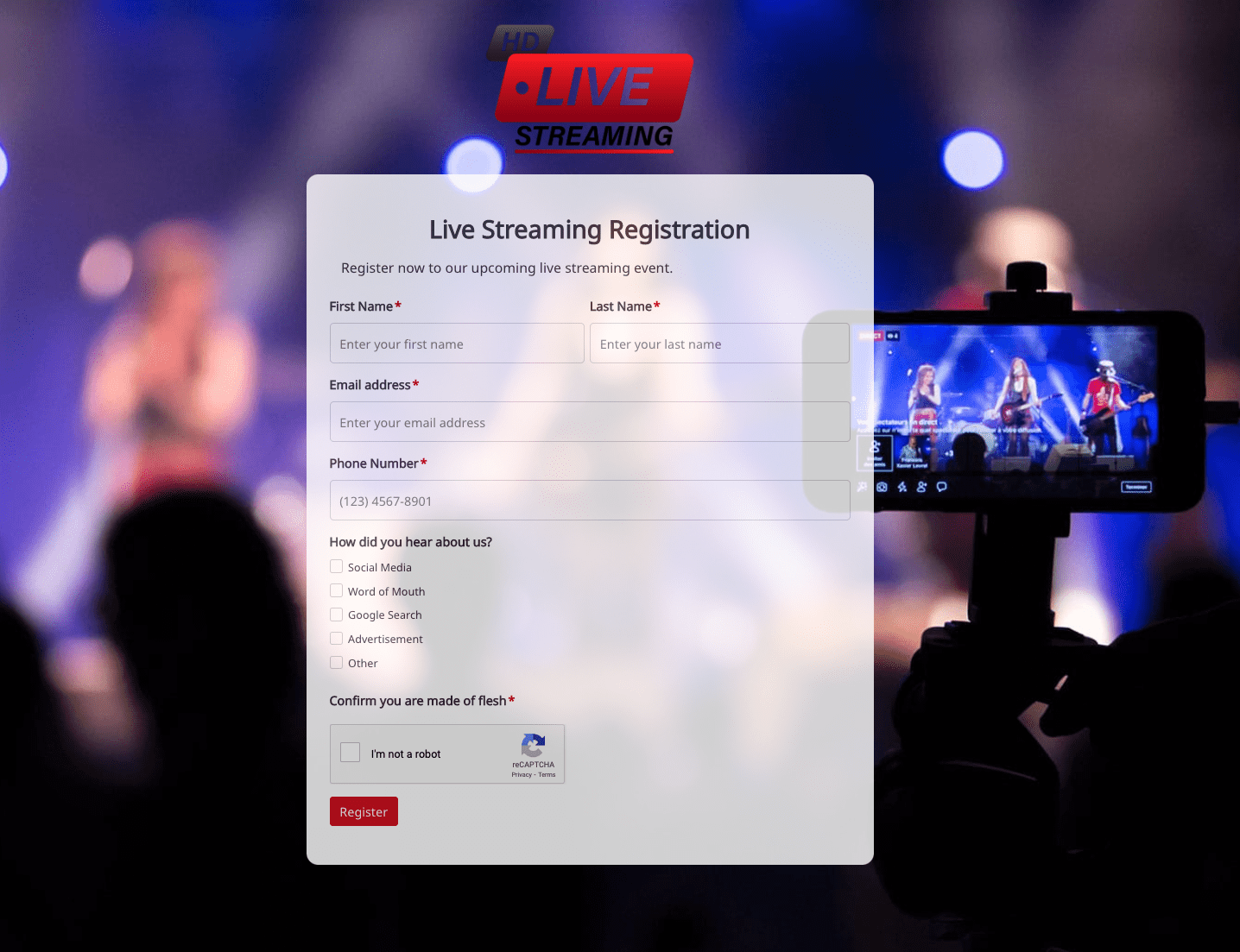

Live Streaming Registration Form

Live Streaming Registration Form

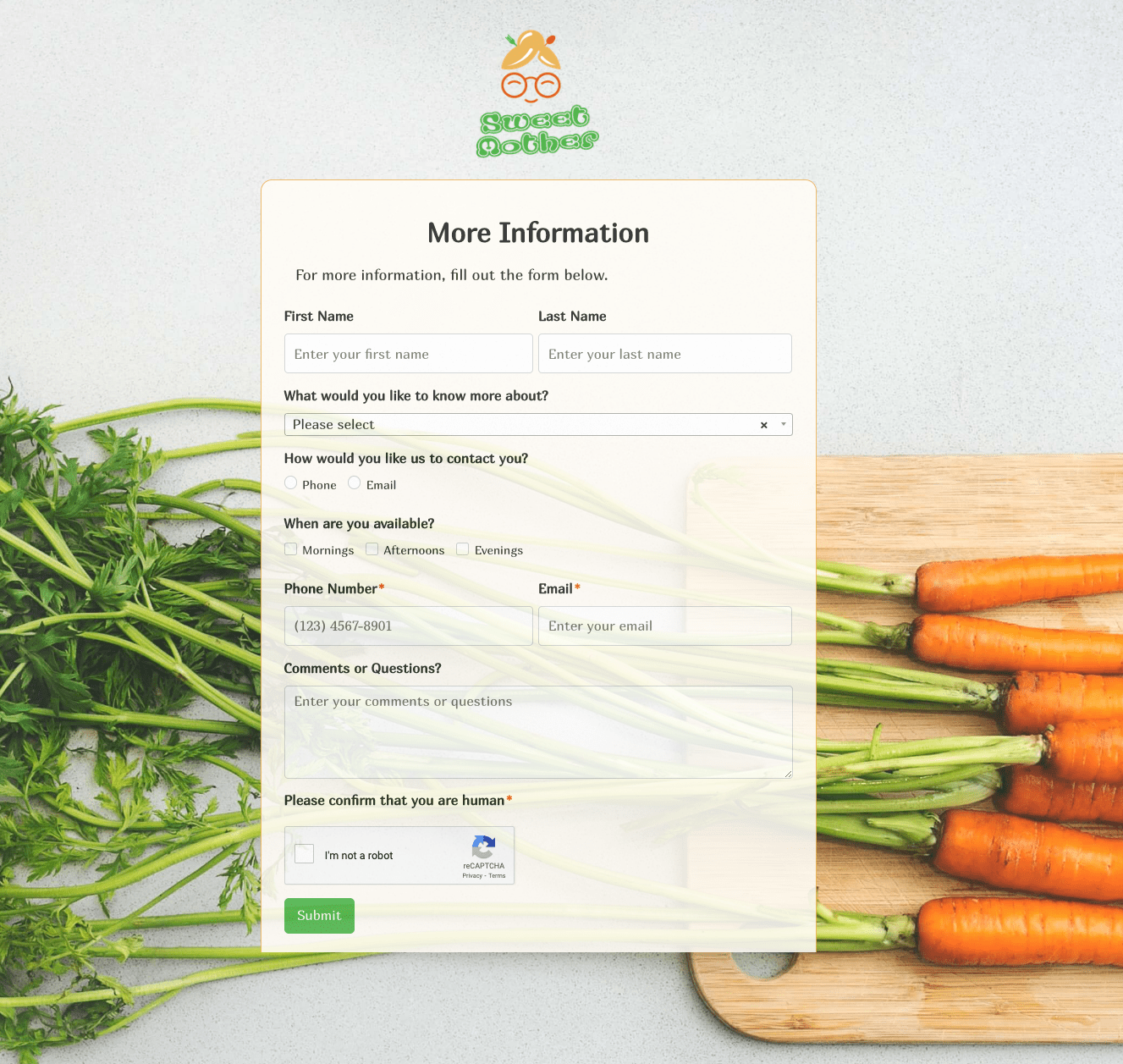

More Information Form

More Information Form

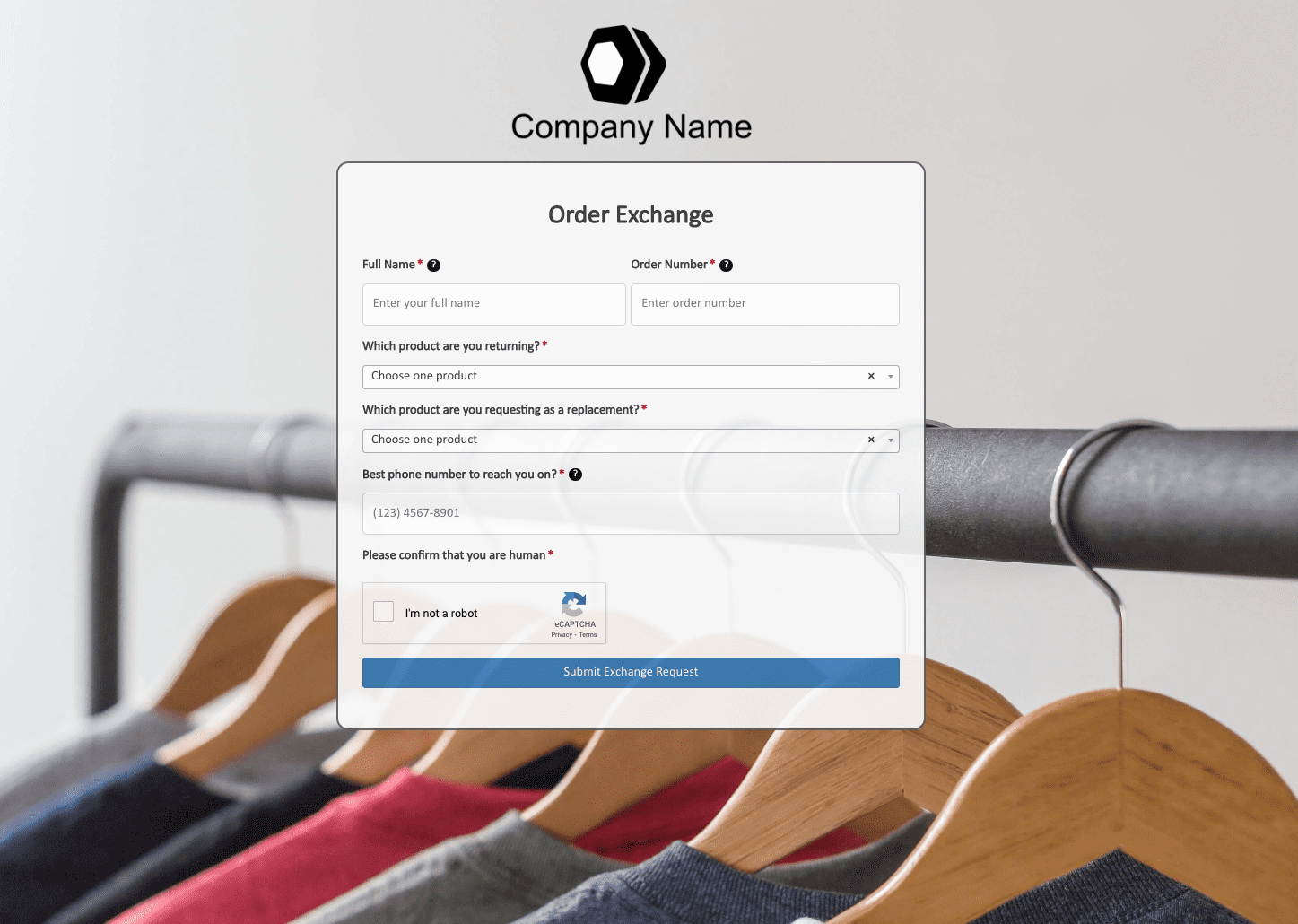

Order Exchange Form

Order Exchange Form

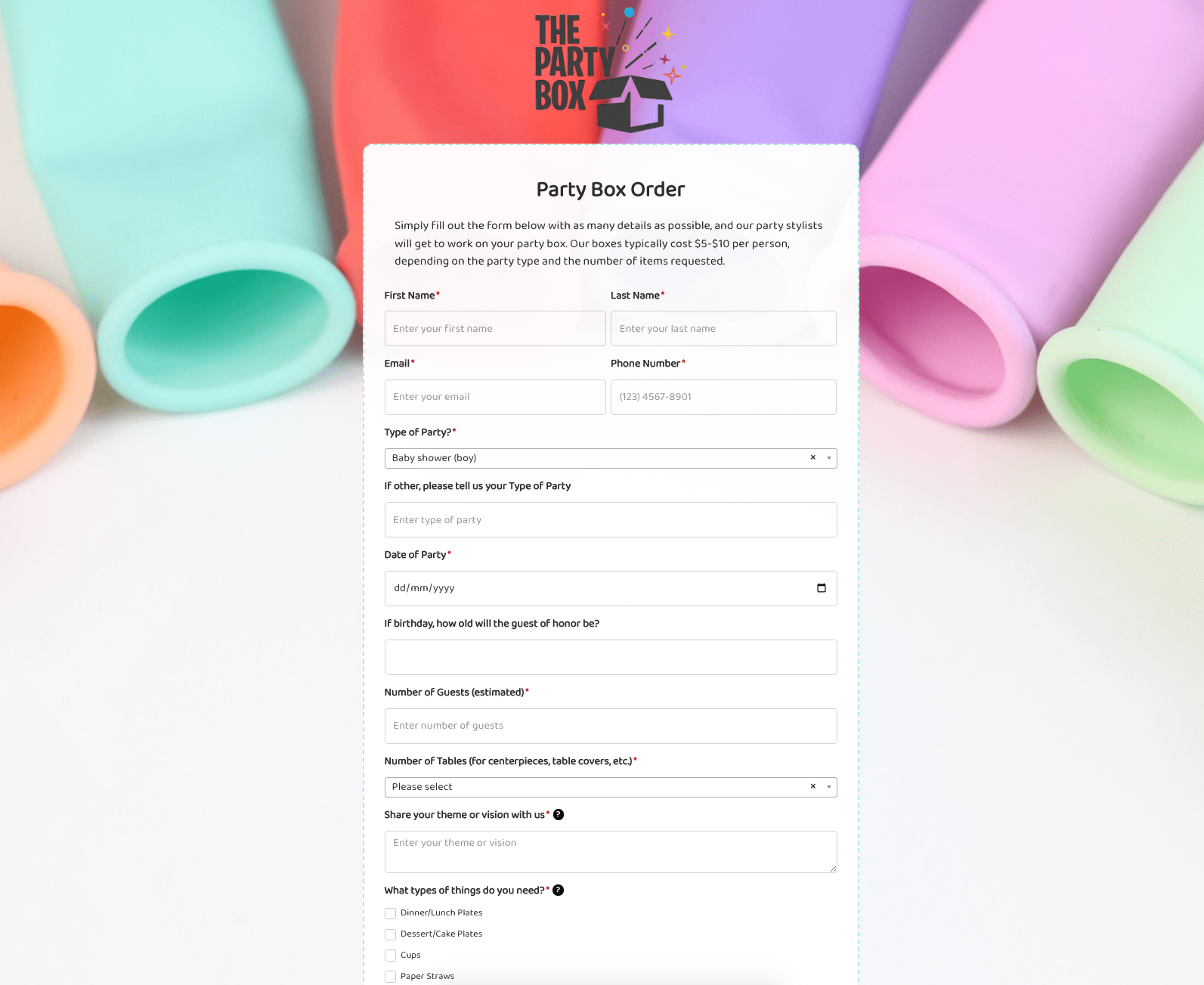

Party Box Order Form

Party Box Order Form

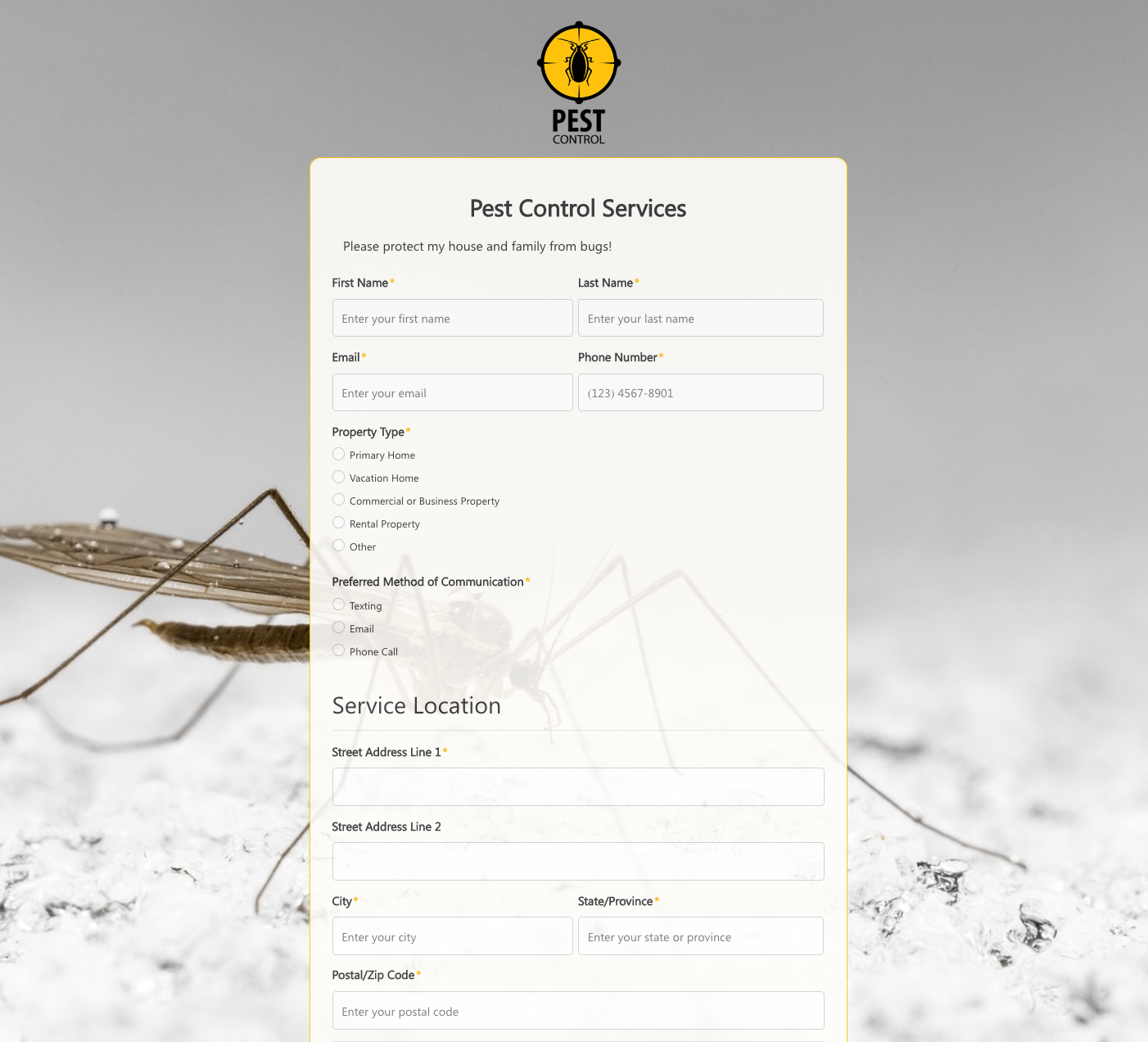

Pest Control Services Form

Pest Control Services Form

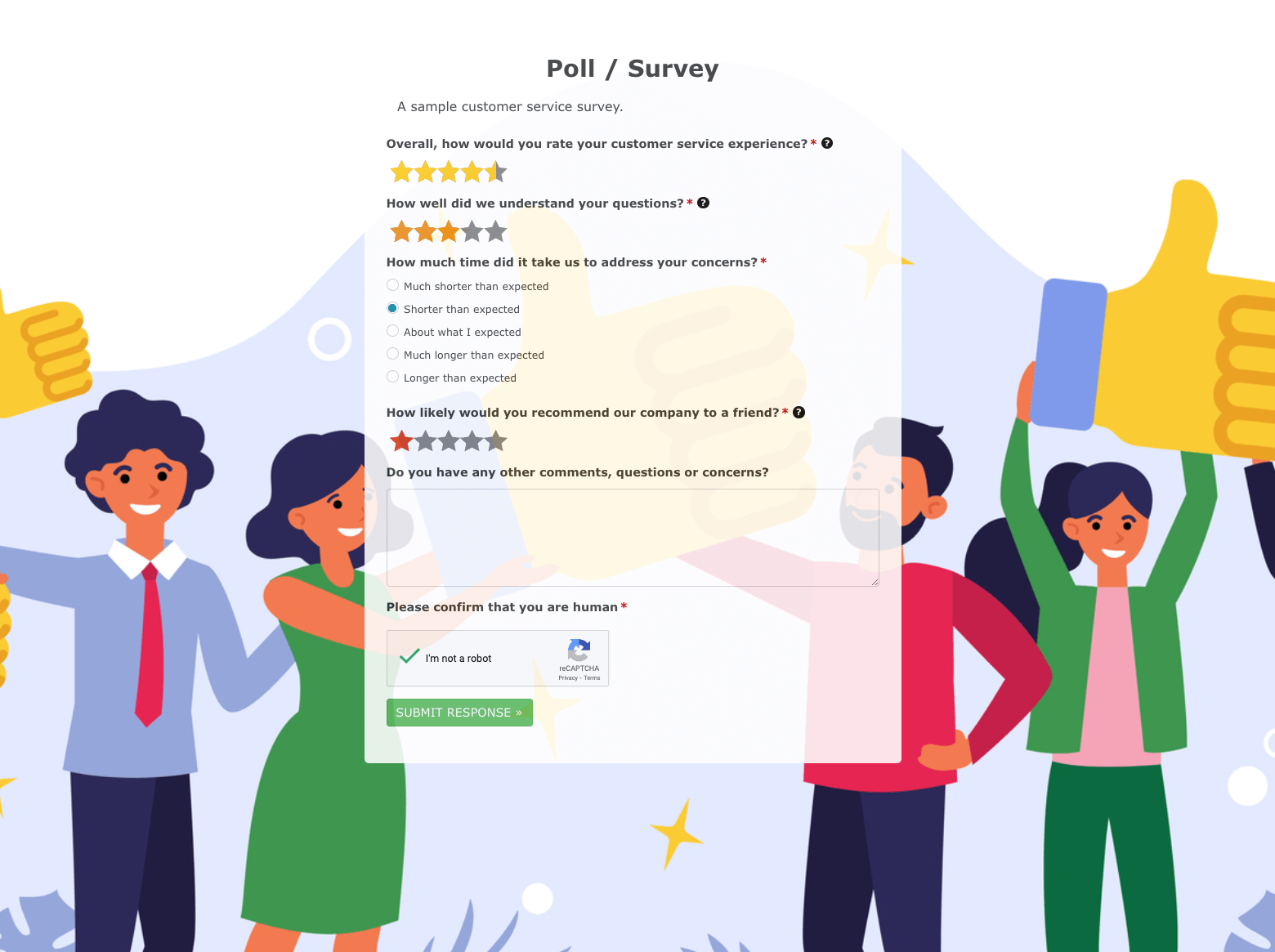

Poll / Survey Form

Poll / Survey Form

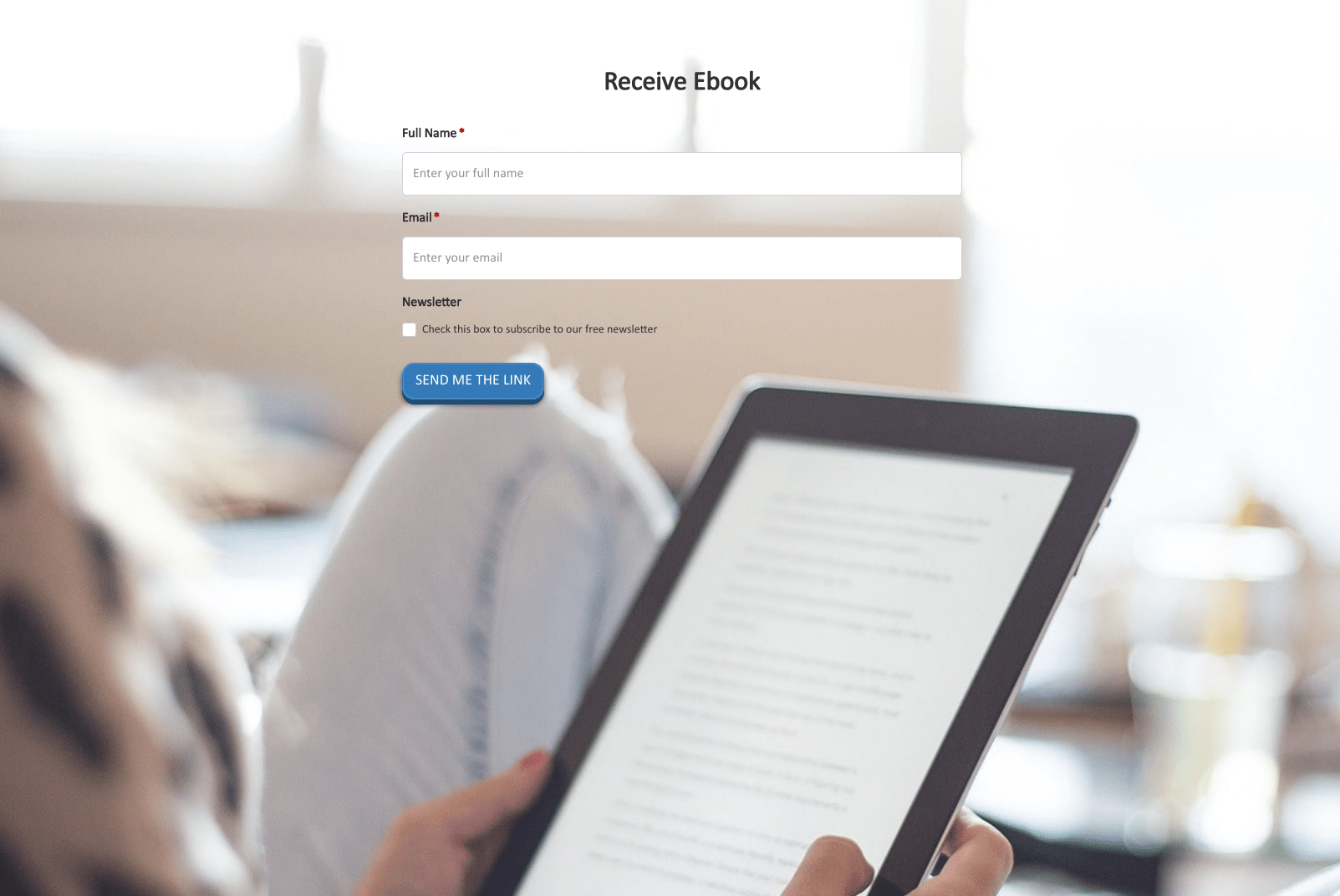

Receive Ebook Form

Receive Ebook Form

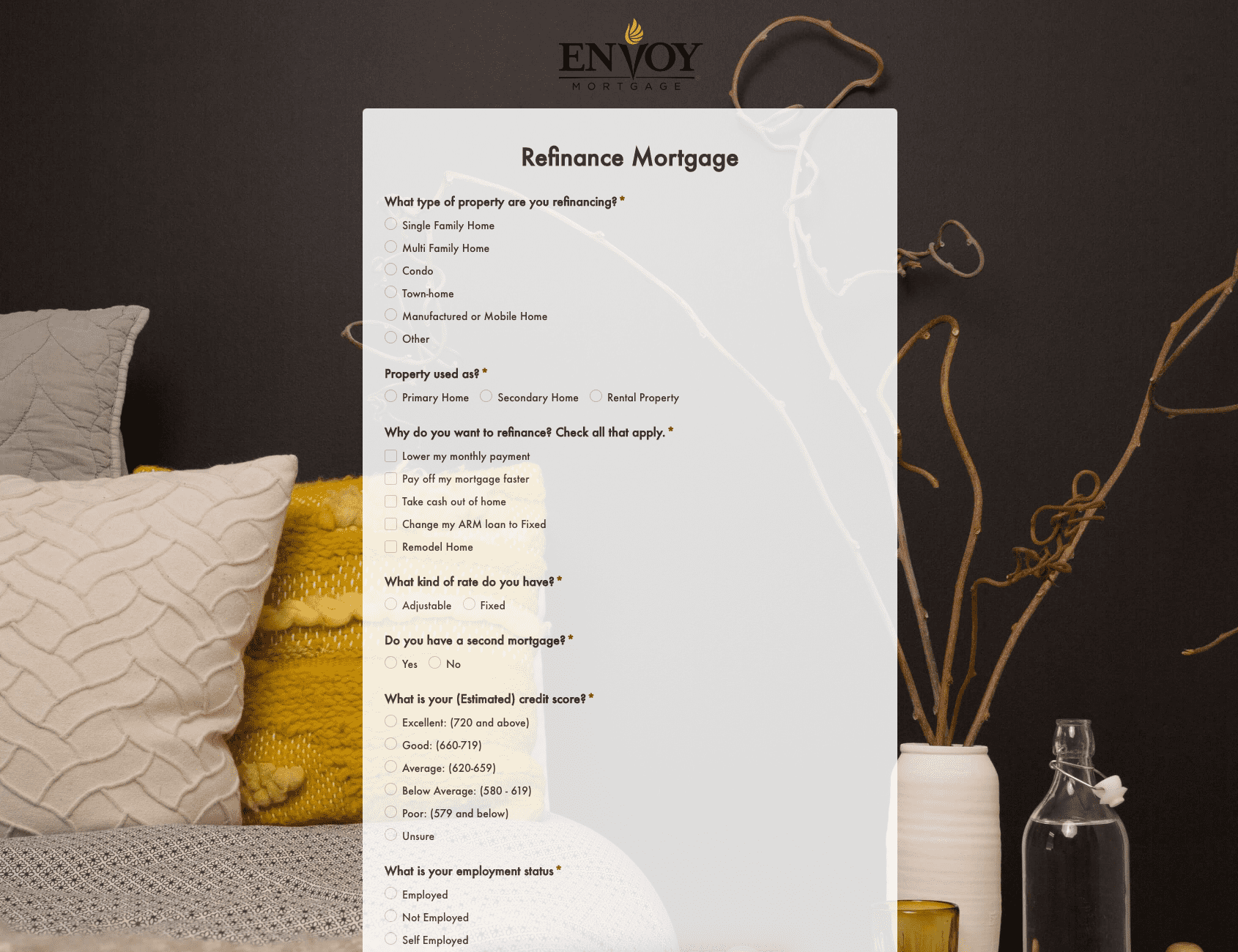

Refinance Mortgage Form

Refinance Mortgage Form

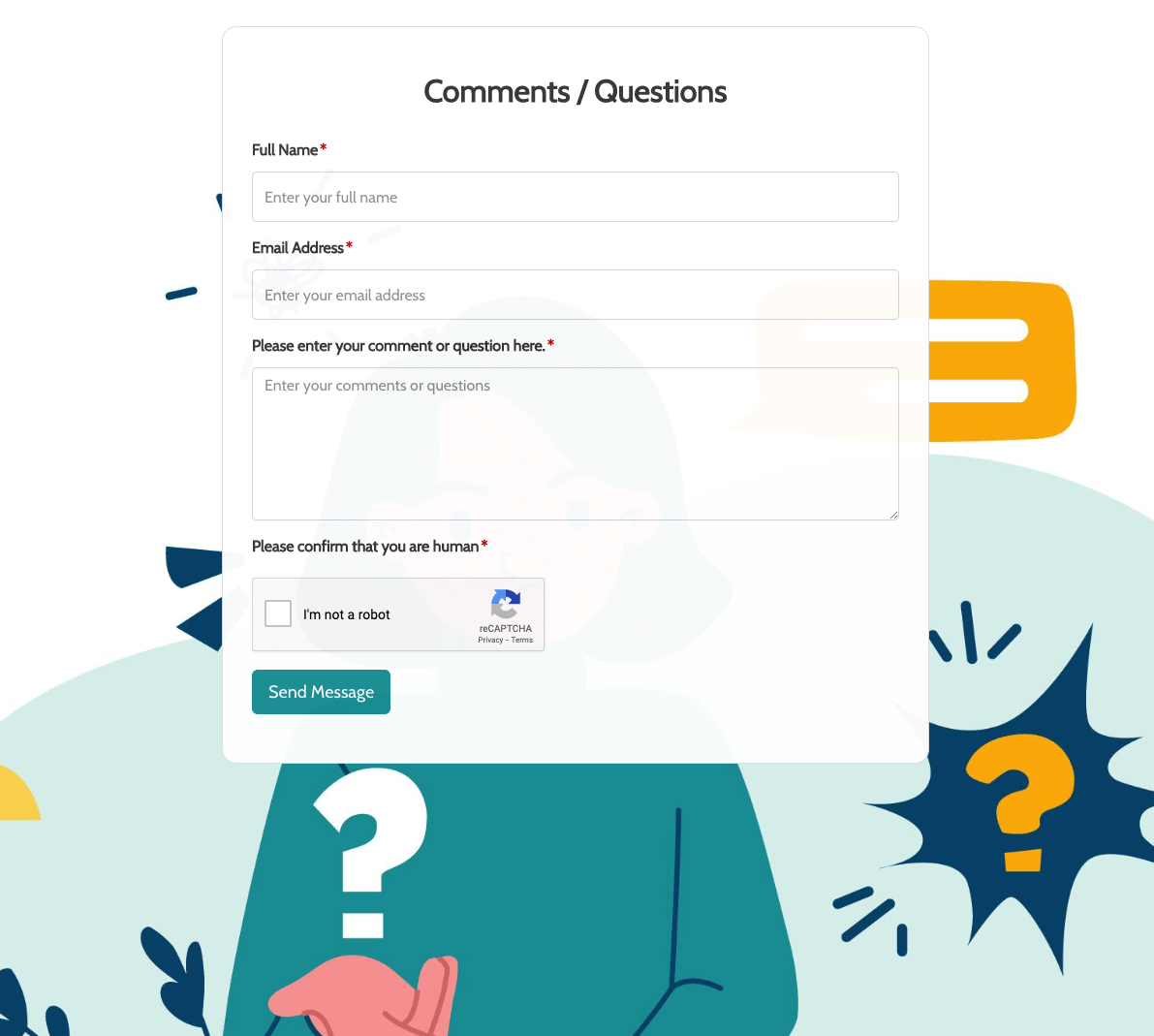

Remarks / Questions Form

Remarks / Questions Form

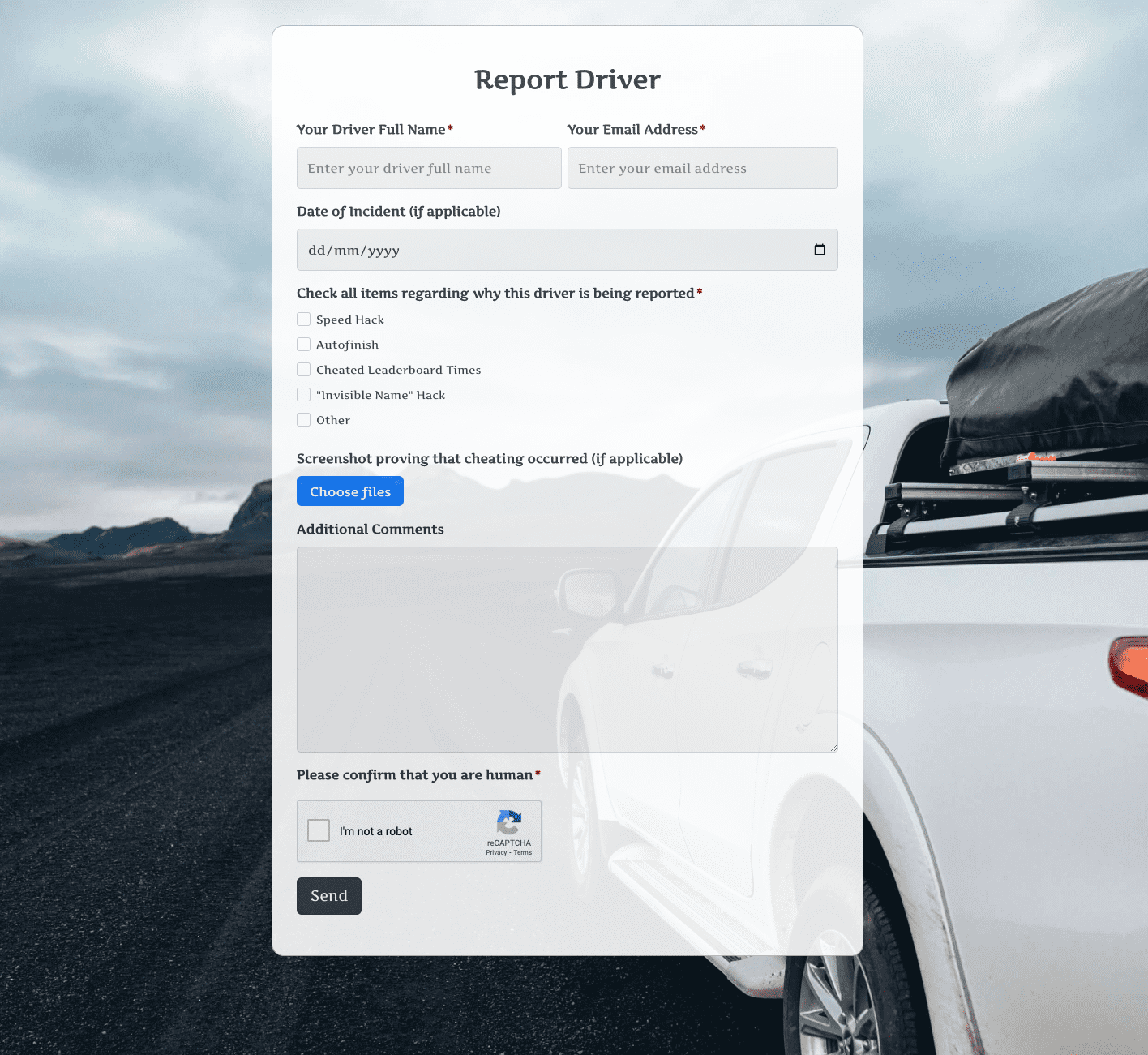

Report Driver Form

Report Driver Form

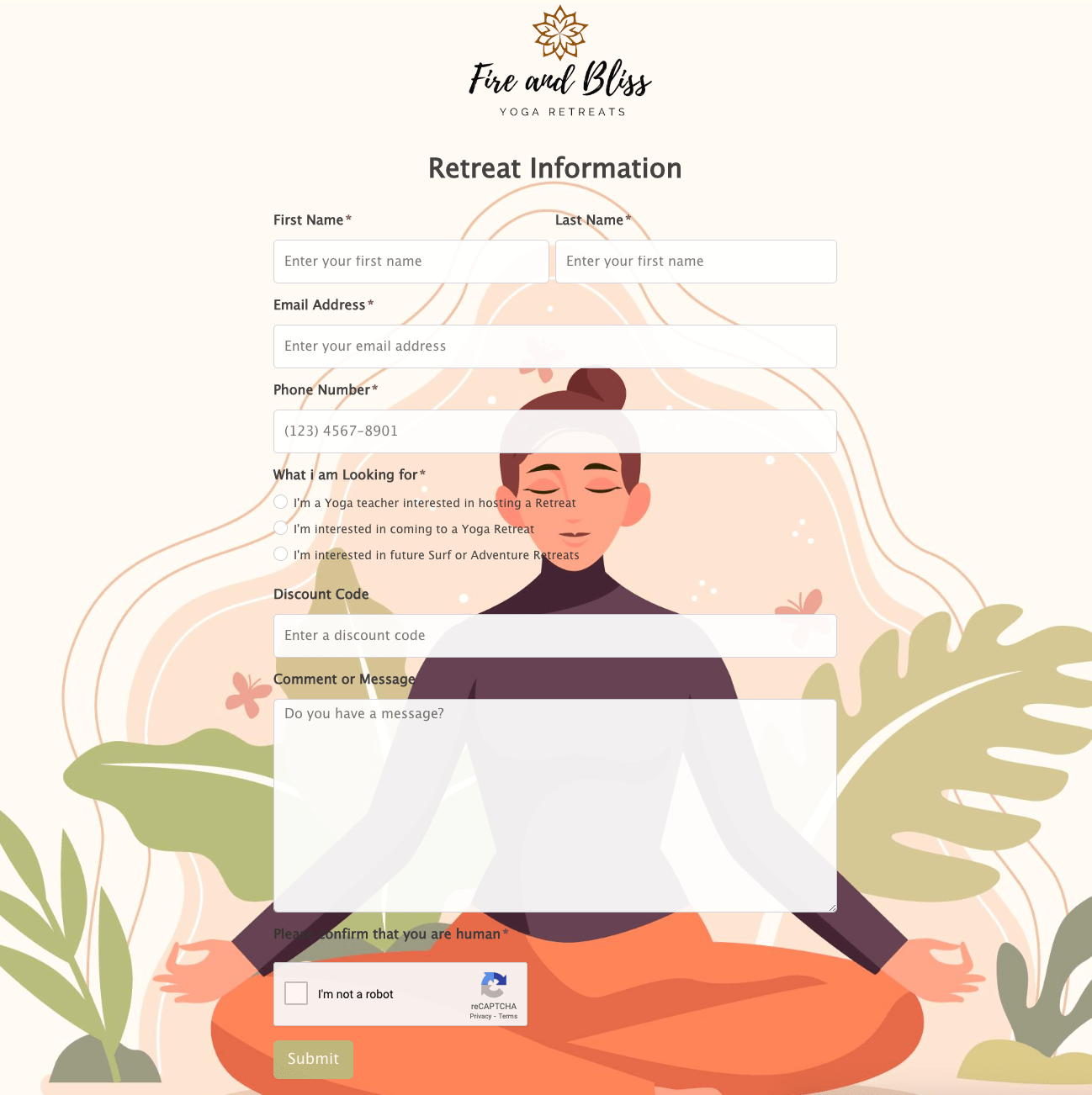

Retreat Information Form

Retreat Information Form

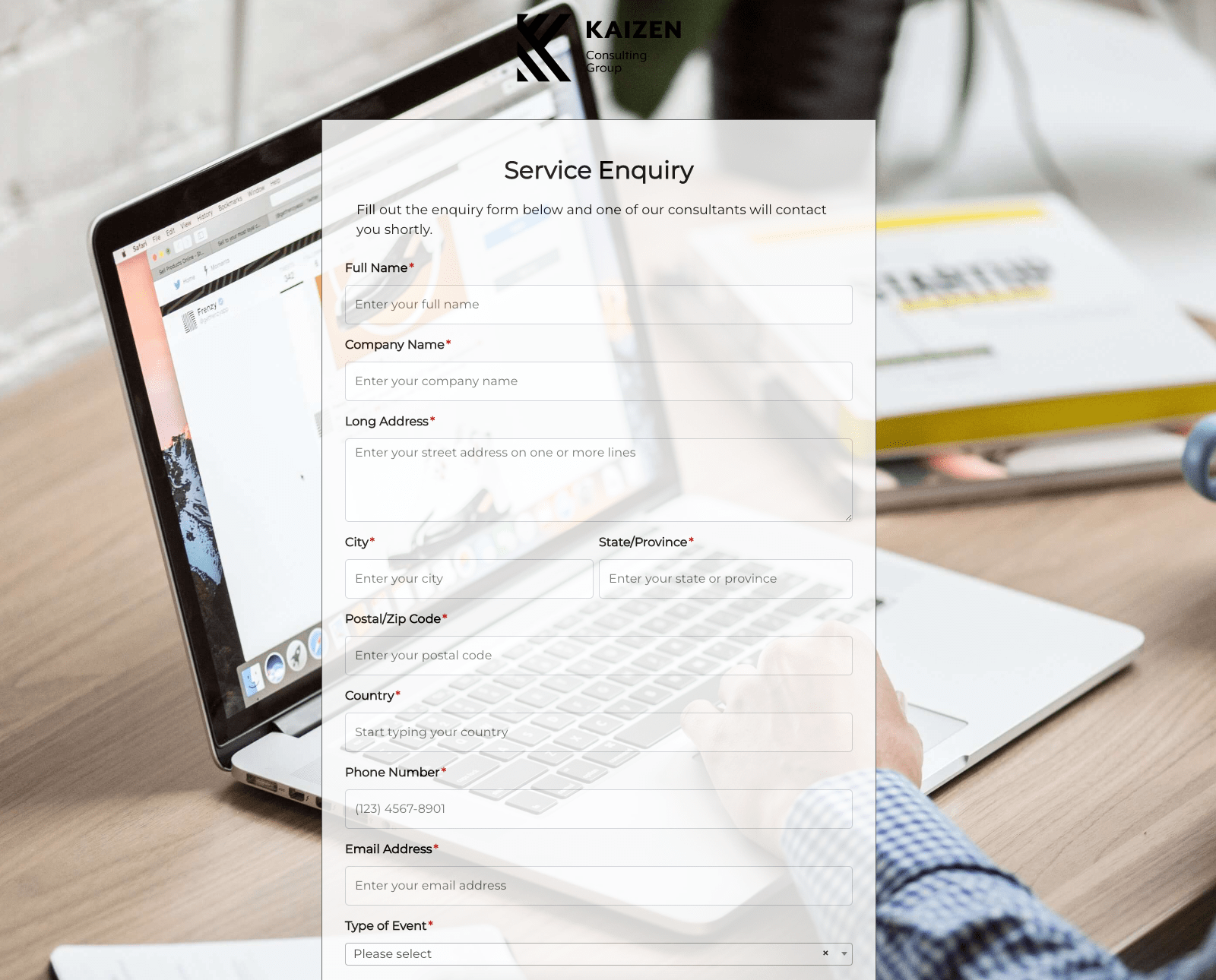

Service Enquiry Form

Service Enquiry Form

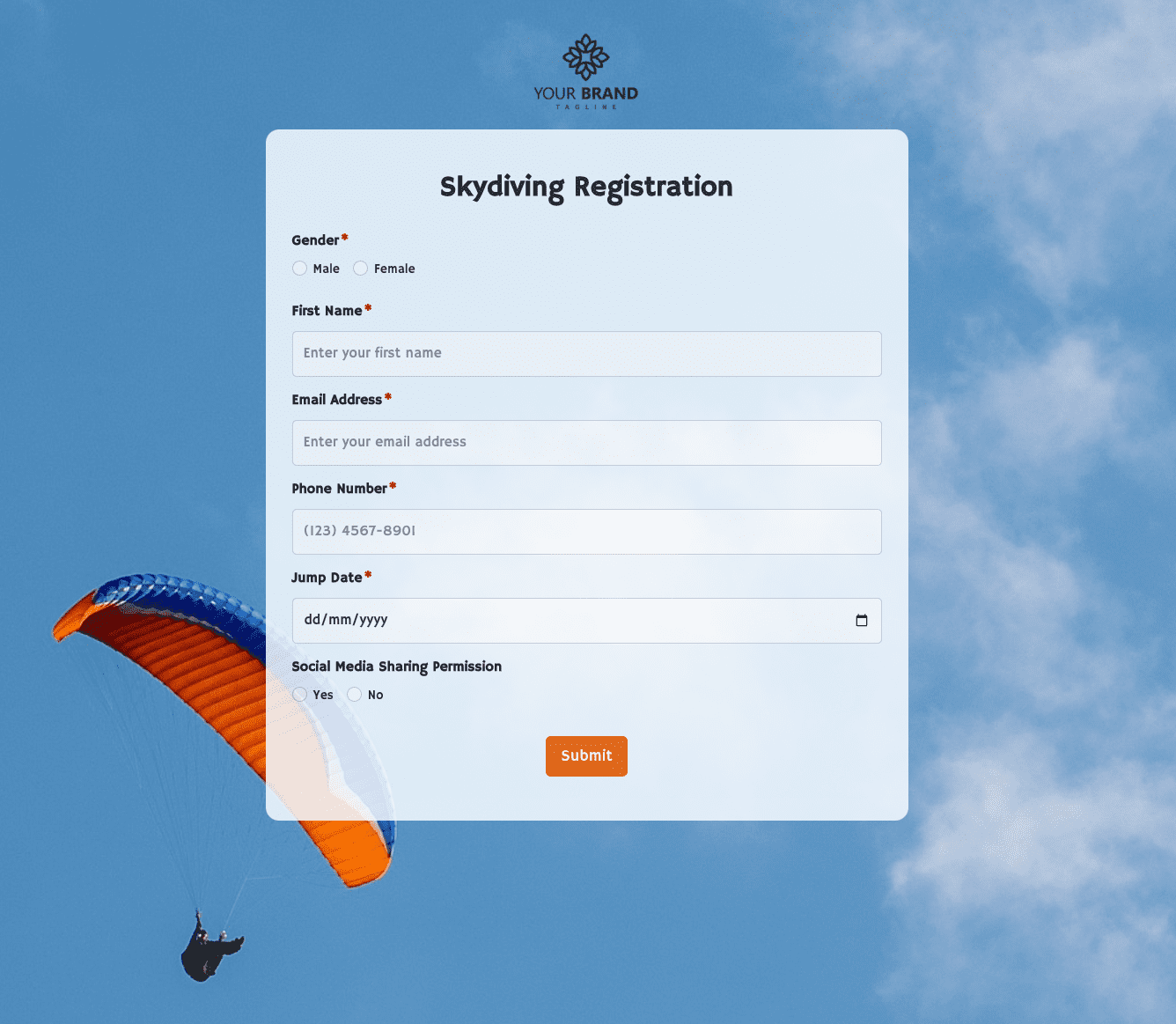

Skydiving Registration Form

Skydiving Registration Form

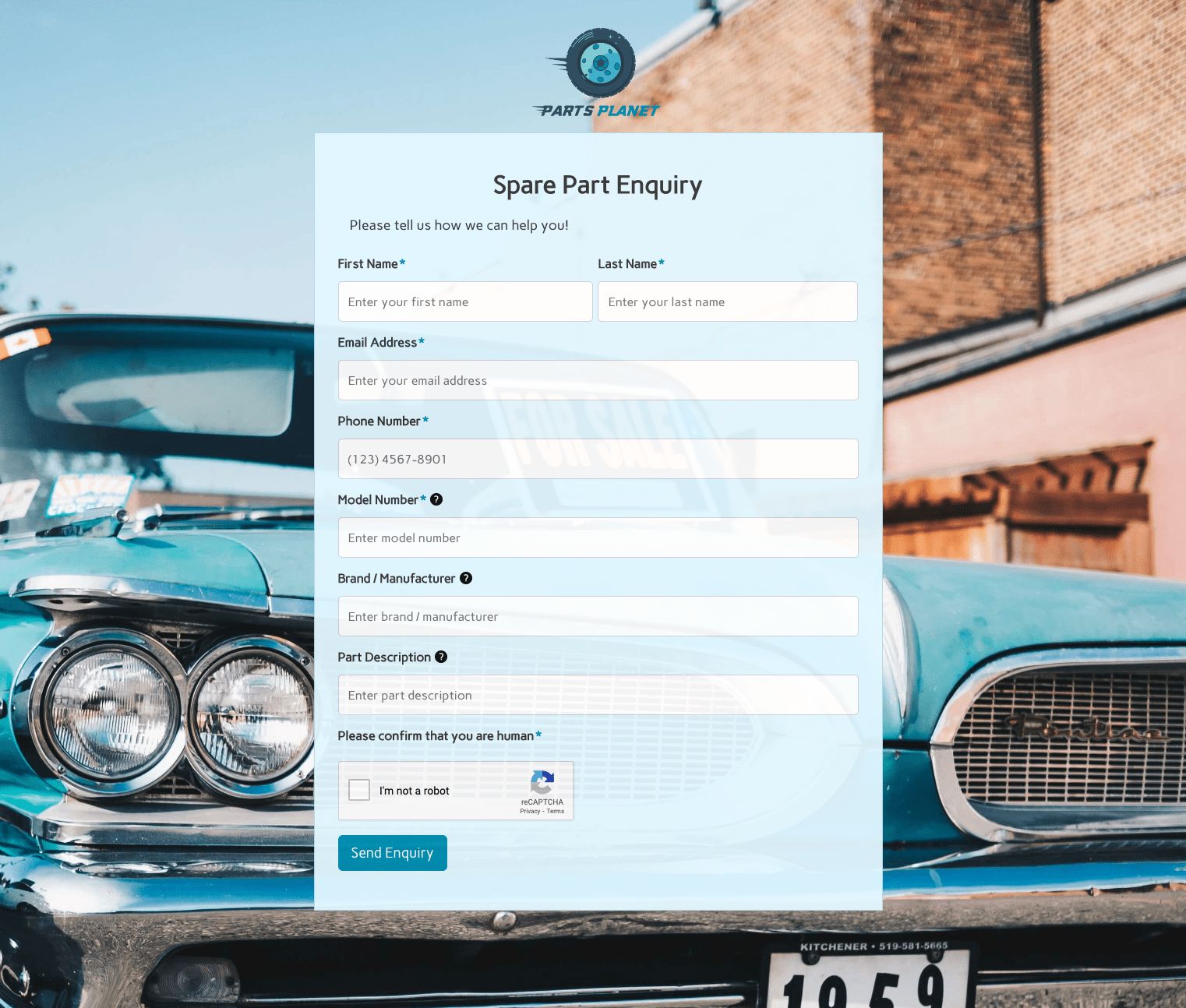

Spare Part Enquiry Form

Spare Part Enquiry Form

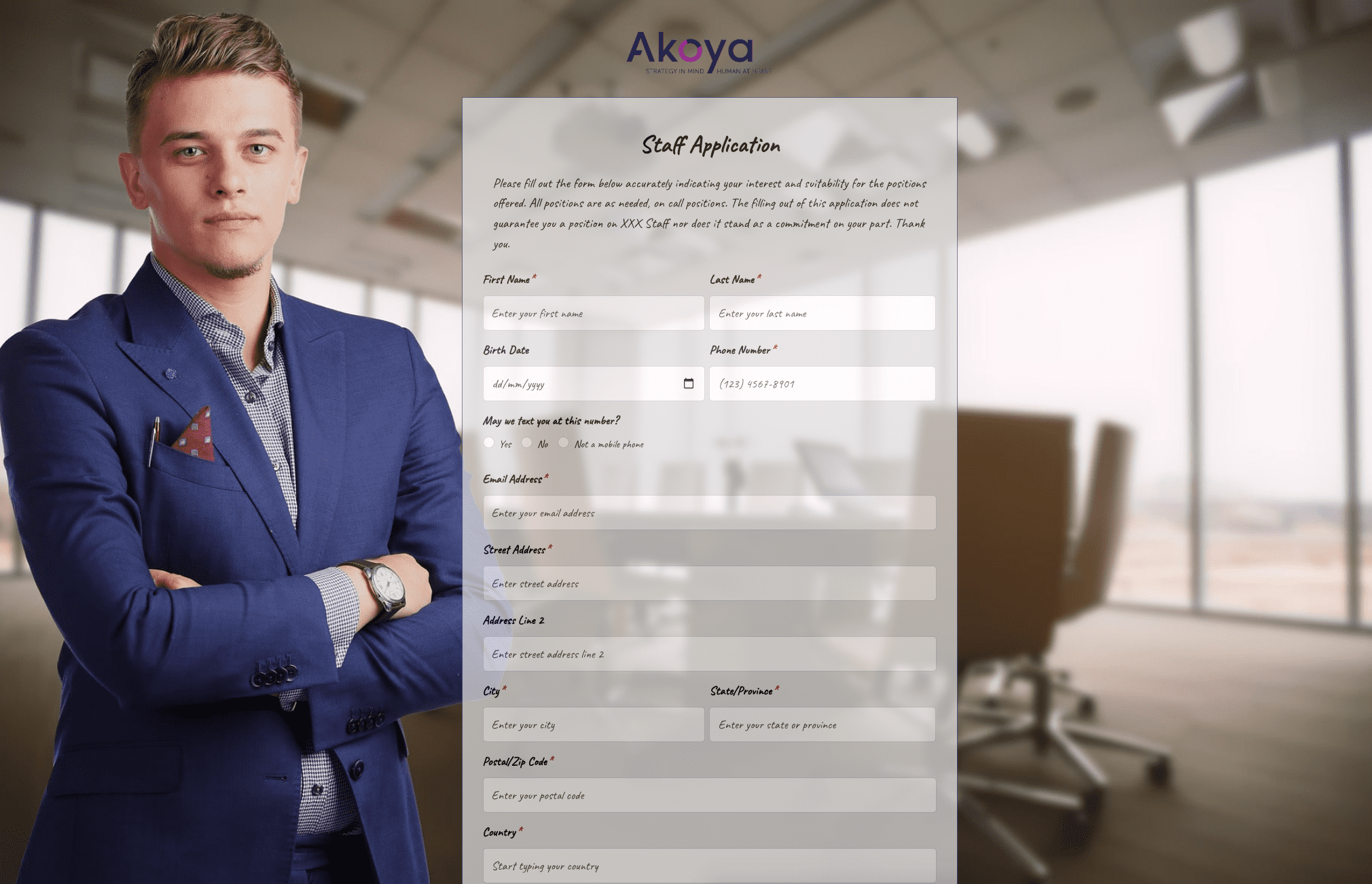

Staff Application Form

Staff Application Form

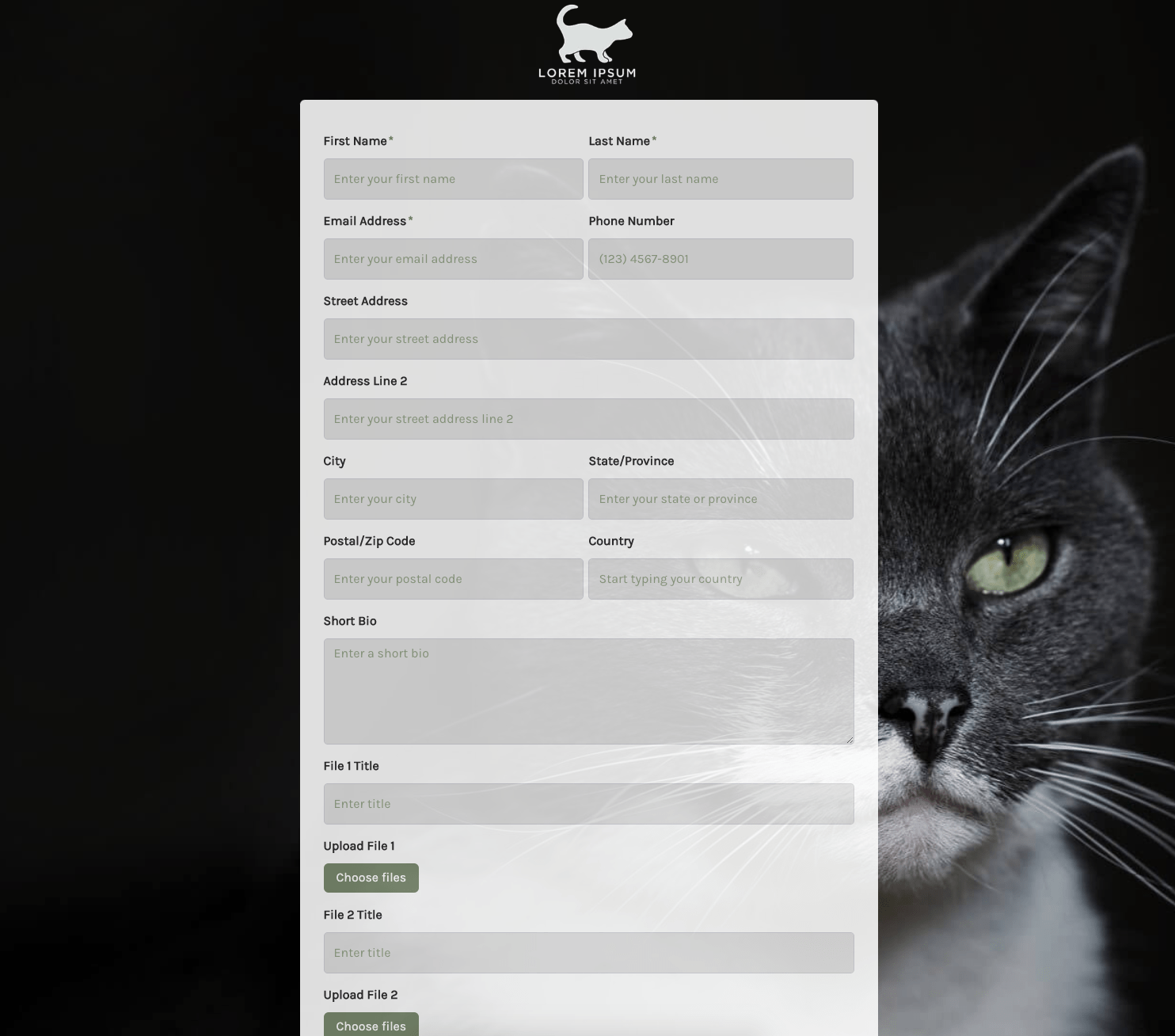

Submission Form

Submission Form

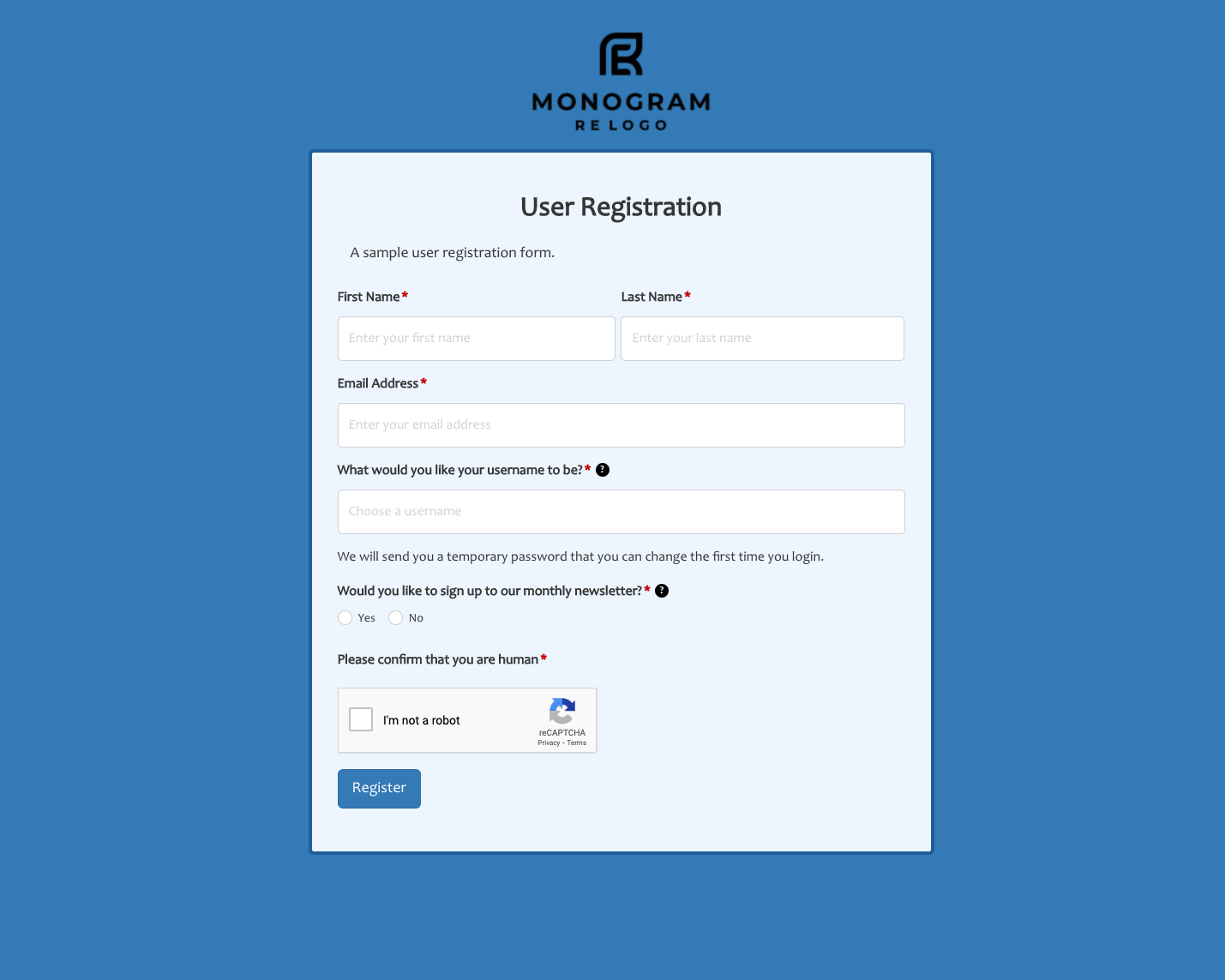

User Registration Form

User Registration Form

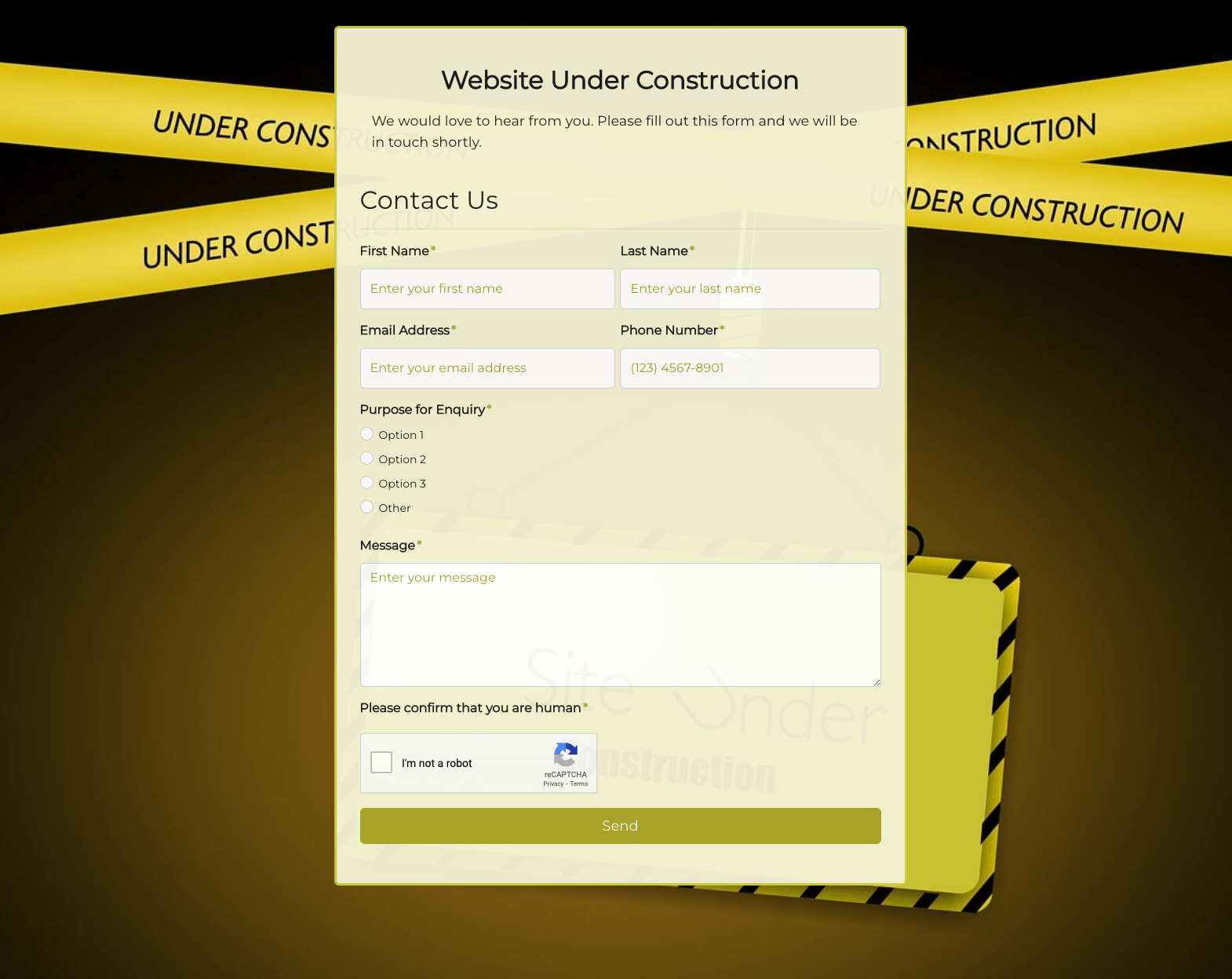

Website Under Construction Form

Website Under Construction Form

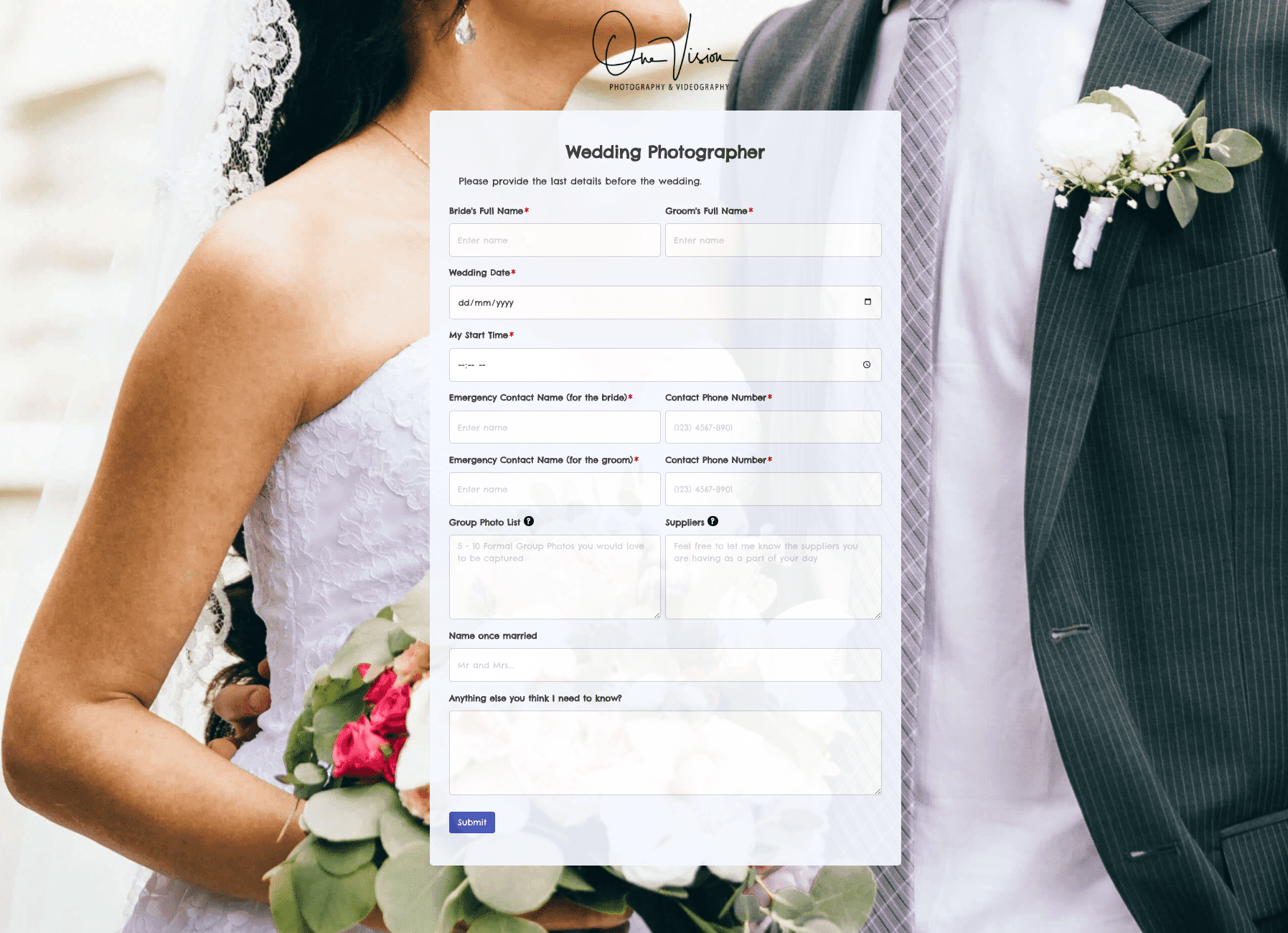

Wedding Photographer Form

Wedding Photographer Form

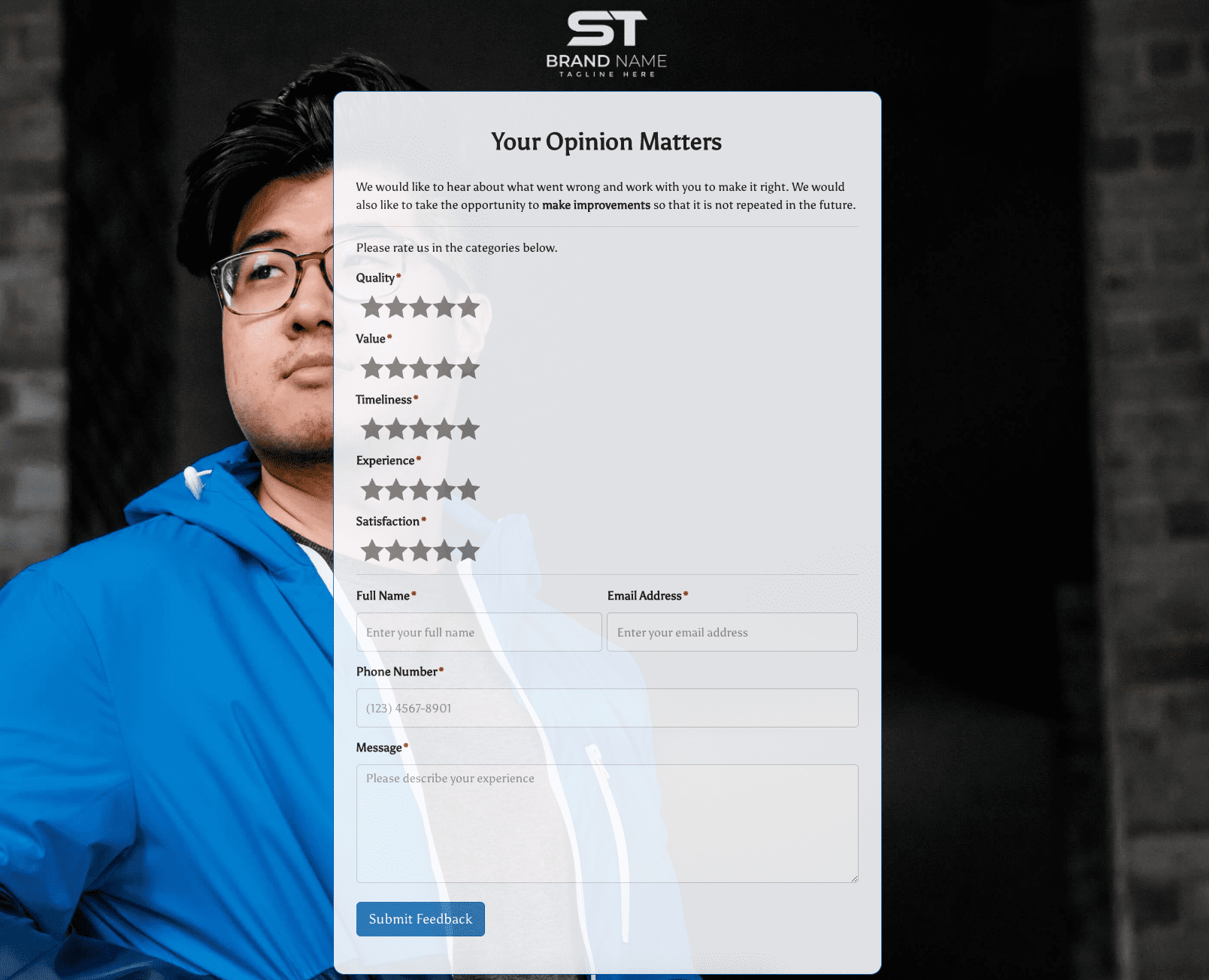

Your Opinion Matters Form

Your Opinion Matters Form

.jpg)